Self-healing test suites are an emerging approach to reduce time spent fighting flaky UI tests by combining test telemetry, selector heuristics, and lightweight ML to propose safe auto-patches that keep CI pipelines green.

Why UI Tests Flake — and Why It Hurts

Flaky UI tests typically arise from brittle selectors, timing/race conditions, visual shifts, or unreliable third-party services. Each failure that isn’t a true regression costs developer time, blocks merges, and reduces confidence in the suite. Rather than repeatedly triaging the same class of failures, a self-healing strategy treats these failures as signals to be diagnosed and, where safe, automatically repaired or suggested to developers.

Observability: The Foundation for Safe Auto-Repair

Observability gives the signals needed to decide whether a failure is flaky and what a correct repair looks like. Capture rich telemetry from every test run:

- DOM snapshots and element metadata (attributes, tag, text, index) at key steps

- Network traces and API latencies to spot external instability

- Timing metrics (wait times, render durations, animation events)

- Failure context: stack traces, viewport and full-page screenshots, and thumbnail diffs

- Historical pass/fail timeline for the same test and related tests

With this data, you can cluster similar failures, measure recurrence, and define confidence thresholds for automated fixes.
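
As a minimal sketch of the clustering step, assume each failed run is stored as a record with a test id, run id, error message and failed selector (the `FailureRecord` fields and the normalization regexes below are hypothetical, not a standard schema). Failures can then be grouped by a normalized error signature so that recurring failures share a key:

```python
import re
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class FailureRecord:
    """One failed run; the fields are illustrative, not a standard schema."""
    test_id: str
    run_id: str
    error_message: str
    failed_selector: str


def signature(record: FailureRecord) -> str:
    """Mask volatile details (numbers, quoted values) so recurring failures share a key."""
    msg = re.sub(r"\d+", "<n>", record.error_message)
    msg = re.sub(r"'[^']*'|\"[^\"]*\"", "<str>", msg)
    return f"{record.test_id}|{msg}"


def cluster_failures(records: List[FailureRecord]) -> List[Tuple[str, List[FailureRecord]]]:
    """Group failures by signature, most frequent (most recurrent) clusters first."""
    clusters: Dict[str, List[FailureRecord]] = defaultdict(list)
    for record in records:
        clusters[signature(record)].append(record)
    return sorted(clusters.items(), key=lambda kv: len(kv[1]), reverse=True)
```

Masking numbers and quoted values means a 5000 ms timeout on one run and a 3000 ms timeout on another land in the same cluster; the cluster size then serves as the recurrence count.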

Lightweight ML and Heuristics: Finding the Right Fix

Lightweight ML models and selector heuristics work together to propose repairs without the complexity of large models. Typical approaches include:

- Selector similarity scoring: compare failed selectors against candidate elements using attribute overlap, DOM proximity, and rendered text similarity.

- DOM fingerprinting: encode element trees into stable fingerprints and use nearest-neighbor search to map old selectors to current elements.

- Heuristic rules: prefer data-* attributes, stable IDs, or ARIA labels over positional CSS selectors, and degrade gracefully to text-based matching when none of these is available.

- Lightweight classification: a small model (e.g., logistic regression) that predicts whether a suggested selector will be stable based on historical acceptance and interaction success.

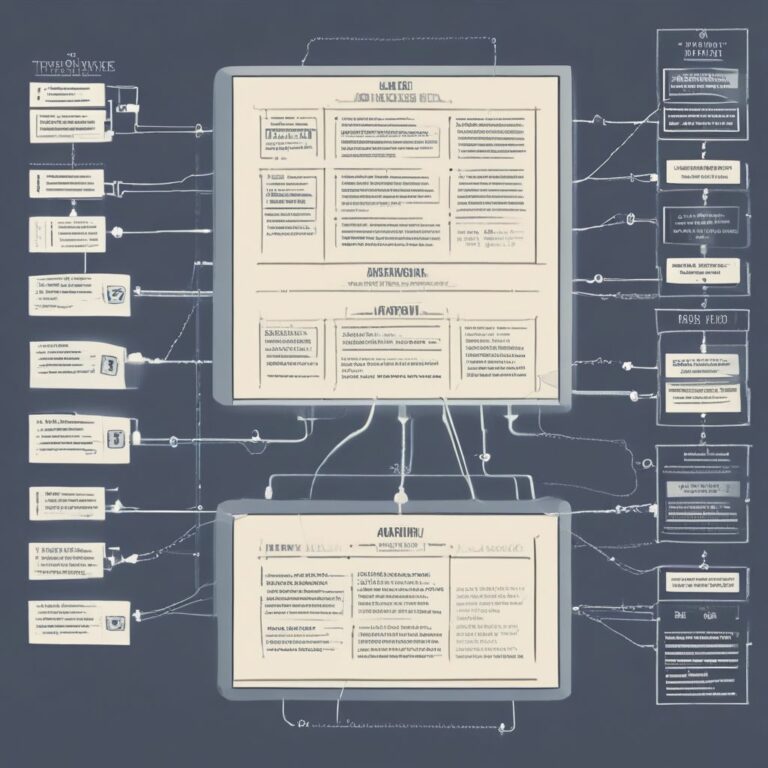

Example Candidate Ranking

When a selector fails, collect n candidate elements and score each one as attribute-match weight plus visual-text similarity plus historical stability, minus a DOM-distance penalty. The top-scoring candidate becomes a proposed patch only if its score exceeds a safety threshold.
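
A minimal sketch of this ranking, assuming attribute match is measured as Jaccard overlap of `(name, value)` pairs, text similarity as `difflib.SequenceMatcher.ratio()`, and DOM distance as tree hops normalized into a 0..1 penalty. The `Candidate` fields, the weights, `MAX_DISTANCE` and the 0.75 threshold are illustrative placeholders, not recommended values:

```python
from dataclasses import dataclass
from difflib import SequenceMatcher
from typing import Dict, List, Optional, Tuple


@dataclass
class Candidate:
    selector: str
    attrs: Dict[str, str]
    text: str
    dom_distance: int            # tree hops from the last-known-good element's position
    historical_stability: float  # 0..1, e.g. share of past runs where this selector resolved


# Illustrative weights: tune them on your own accepted/rejected patch history.
WEIGHTS = {"attr": 0.4, "text": 0.3, "stability": 0.3, "distance": 0.2}
MAX_DISTANCE = 5  # hops at which the distance penalty saturates


def attribute_overlap(a: Dict[str, str], b: Dict[str, str]) -> float:
    """Jaccard similarity over (name, value) attribute pairs."""
    pa, pb = set(a.items()), set(b.items())
    union = pa | pb
    return len(pa & pb) / len(union) if union else 0.0


def score(old_attrs: Dict[str, str], old_text: str, c: Candidate) -> float:
    """Weighted similarity minus a normalized DOM-distance penalty."""
    penalty = min(c.dom_distance / MAX_DISTANCE, 1.0)
    return (WEIGHTS["attr"] * attribute_overlap(old_attrs, c.attrs)
            + WEIGHTS["text"] * SequenceMatcher(None, old_text, c.text).ratio()
            + WEIGHTS["stability"] * c.historical_stability
            - WEIGHTS["distance"] * penalty)


def propose_patch(old_attrs: Dict[str, str], old_text: str, candidates: List[Candidate],
                  threshold: float = 0.75) -> Optional[Tuple[str, float]]:
    """Return (selector, score) for the best candidate, or None below the safety threshold."""
    if not candidates:
        return None
    best_score, best = max(((score(old_attrs, old_text, c), c) for c in candidates),
                           key=lambda pair: pair[0])
    return (best.selector, best_score) if best_score >= threshold else None
```

Because the three positive weights sum to 1.0 and the penalty only subtracts, scores never exceed 1.0, which makes a fixed threshold easy to reason about.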

Safe Auto-Patches: Rules for Automation

Automation must be conservative. Use multiple safety gates to allow automatic application while preventing regressions:

- Confidence threshold: only auto-apply patches when the model/heuristics report high confidence (e.g., a score level whose suggestions were correct more than 95% of the time on historical data).

- Canary runs: apply the patch in a canary pipeline or branch and require a few successful CI runs before merging to main.

- Behavioral verification: re-run the same test steps and assert that key post-conditions (API calls, navigation, visible text) match prior successful runs.

- Human-in-the-loop: for medium-confidence suggestions, create a PR with the proposed selector change and an annotated screenshot; allow the author to accept or reject.

- Automatic rollback: if subsequent runs show regressions, revert the change and tag the event as a suspected false positive for model retraining.
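
The confidence, verification, human-in-the-loop and canary gates can be combined into a single routing function. This sketch assumes `confidence` is already a calibrated probability and that the behavioral verification result is available as a boolean; the `PatchProposal` type, the 0.95 and 0.70 cut-offs and the three-run canary requirement are hypothetical defaults. A patch that fails behavioral verification is never auto-applied; at best it goes to a human as a PR.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    AUTO_APPLY_CANARY = "auto_apply_canary"
    OPEN_PR = "open_pr"
    DISCARD = "discard"


@dataclass
class PatchProposal:
    test_id: str
    old_selector: str
    new_selector: str
    confidence: float          # assumed calibrated probability from heuristics/model
    verification_passed: bool  # post-conditions matched a prior successful run


HIGH = 0.95    # mirrors the ">95%" example above; tune on your own history
MEDIUM = 0.70  # hypothetical lower bound for human review


def decide(p: PatchProposal) -> Action:
    """Route a proposal through the confidence and verification gates."""
    if p.verification_passed and p.confidence > HIGH:
        return Action.AUTO_APPLY_CANARY  # still has to survive canary runs before main
    if p.confidence >= MEDIUM:
        return Action.OPEN_PR            # human reviews the selector diff and screenshot
    return Action.DISCARD


def ready_for_main(consecutive_green_canary_runs: int, required: int = 3) -> bool:
    """Promote a canary patch only after enough consecutive green CI runs."""
    return consecutive_green_canary_runs >= required
```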

Implementation Roadmap

Break the work into manageable phases to get value quickly and safely:

- Phase 1 — Telemetry & Storage: instrument tests to capture DOM snapshots, screenshots, and timings; store them indexed by test id and run id.

- Phase 2 — Heuristics Engine: implement selector similarity and rule-based candidate generation; expose a “suggestion” API.

- Phase 3 — Verification Harness: create a verification runner that executes candidate patches in isolation and asserts behavioral parity.

- Phase 4 — Automated Patch Workflow: integrate into CI to auto-apply high-confidence patches in a canary workflow, create PRs for medium-confidence fixes, and log all actions.

- Phase 5 — Feedback Loop & Retraining: use accept/reject/rollback events to refine heuristics and retrain lightweight models periodically.
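
The behavioral-parity check at the heart of Phase 3 can be sketched as a comparison between post-conditions captured from the last passing run and from the candidate run. The `PostConditions` fields (final URL, API calls as `"METHOD /path"` strings, key visible text) are assumptions about what your harness records, not a fixed format:

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class PostConditions:
    """Observable outcomes recorded after a test's steps; fields are illustrative."""
    final_url: str
    api_calls: FrozenSet[str]     # e.g. "POST /api/orders"
    visible_text: FrozenSet[str]  # key strings expected on screen


def parity_diffs(baseline: PostConditions, candidate: PostConditions) -> List[str]:
    """Return human-readable differences; an empty list means behavioral parity."""
    diffs: List[str] = []
    if baseline.final_url != candidate.final_url:
        diffs.append(f"navigation: {baseline.final_url} != {candidate.final_url}")
    missing_calls = baseline.api_calls - candidate.api_calls
    if missing_calls:
        diffs.append(f"missing API calls: {sorted(missing_calls)}")
    missing_text = baseline.visible_text - candidate.visible_text
    if missing_text:
        diffs.append(f"missing visible text: {sorted(missing_text)}")
    return diffs
```

This check only flags missing calls or text, so incidental extra requests (analytics beacons, for example) do not fail verification; make the comparison symmetric if your suite needs stricter parity.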

Metrics to Track

Measure the health and safety of the self-healing system with these metrics:

- Flake rate (non-regression failures per 1,000 runs) before and after self-healing

- Auto-patch acceptance rate and rollback rate

- False positive rate (patches that masked real regressions)

- Mean time to resolution for flaky tests

- Developer overhead (time saved per week on test triage)
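
Most of these metrics are simple ratios over counts your pipeline already logs. The sketch below assumes hypothetical per-period counters in `PeriodStats`; mean time to resolution and developer time saved need timestamps from your CI or issue tracker and are not modeled here:

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class PeriodStats:
    """Hypothetical counters for one reporting period."""
    total_runs: int
    flaky_failures: int       # failures later judged not to be real regressions
    patches_proposed: int
    patches_applied: int      # auto-applied or merged after review
    patches_rolled_back: int
    masked_regressions: int   # applied patches later found to hide a real bug


def ratio(numerator: int, denominator: int) -> float:
    """Safe division: an empty period reports 0.0 instead of raising."""
    return numerator / denominator if denominator else 0.0


def summarize(s: PeriodStats) -> Dict[str, float]:
    return {
        "flake_rate_per_1000": 1000 * ratio(s.flaky_failures, s.total_runs),
        "acceptance_rate": ratio(s.patches_applied, s.patches_proposed),
        "rollback_rate": ratio(s.patches_rolled_back, s.patches_applied),
        "false_positive_rate": ratio(s.masked_regressions, s.patches_applied),
    }
```

Computing the same summary for the period before self-healing was enabled and for each period after keeps the flake-rate comparison on one definition.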

Pitfalls and Best Practices

- Do not auto-fix assertions or business logic — limit automation to selectors and non-functional waits.

- Prefer read-only verification assertions (UI appearance, API calls) over brittle state mutations when validating a patch.

- Keep models simple and explainable; developers must understand why a selector was chosen.

- Log every change with screenshots and the exact confidence score; traceability is essential for audits.

- Start small: pilot on the most flaky suite and expand once trust grows.
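
To keep every change traceable and explainable, each action can be written as one JSON line to an append-only log. The function names and record fields below are illustrative assumptions; `reasons` is meant to hold per-feature score contributions so developers can see why a selector was chosen:

```python
import json
from datetime import datetime, timezone
from typing import Dict, List


def audit_entry(test_id: str, old_selector: str, new_selector: str, confidence: float,
                action: str, screenshots: List[str], reasons: Dict[str, float]) -> str:
    """Serialize one selector change as a JSON line; field names are illustrative."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "test_id": test_id,
        "old_selector": old_selector,
        "new_selector": new_selector,
        "confidence": confidence,  # stored unrounded: the exact score used for the decision
        "action": action,          # e.g. "auto_apply_canary", "open_pr", "rollback"
        "screenshots": list(screenshots),
        "reasons": reasons,        # per-feature contributions, for explainability
    }
    return json.dumps(record, sort_keys=True)


def append_audit(path: str, line: str) -> None:
    """Append-only write; earlier entries are never rewritten."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(line + "\n")
```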

Real-World Example Flow

When test T fails with “element not found”:

- Collect the failure snapshot, screenshot, network logs, and last-known good DOM fingerprint.

- Generate candidate selectors using heuristics and score them with a lightweight model.

- Run the top candidate in a verification runner that checks the same behavioral post-conditions.

- If verification passes and confidence is high, auto-apply in a canary pipeline; otherwise open a PR with visuals for human review.

- Monitor the next N runs; if regressions appear, roll back and mark the case for retraining.
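
The flow above can be sketched as a small orchestrator plus a post-patch monitor. Every callable passed in (`collect_context`, `generate_candidates`, `score`, `verify`) is a hypothetical hook into your own telemetry store, heuristics engine and verification runner; the `open_issue` fallback for when no candidate exists, the "any failure triggers rollback" rule, and the window of N = 10 runs are added assumptions:

```python
from typing import Callable, List, Optional, Sequence, Tuple


def heal(test_id: str,
         collect_context: Callable[[str], dict],
         generate_candidates: Callable[[dict], List[str]],
         score: Callable[[dict, str], float],
         verify: Callable[[str, str], bool],
         high: float = 0.95) -> Tuple[str, Optional[str], float]:
    """Handle one 'element not found' failure; returns (action, selector, confidence)."""
    context = collect_context(test_id)         # snapshot, screenshot, network logs, fingerprint
    candidates = generate_candidates(context)  # heuristic candidate selectors
    if not candidates:
        return ("open_issue", None, 0.0)       # nothing to propose; leave it to a human
    confidence, best = max(((score(context, sel), sel) for sel in candidates),
                           key=lambda pair: pair[0])
    if verify(test_id, best) and confidence > high:
        return ("canary", best, confidence)    # auto-apply in the canary pipeline
    return ("open_pr", best, confidence)       # PR with visuals for human review


def monitor_verdict(results_after_patch: Sequence[bool], n: int = 10) -> str:
    """Watch the next N runs: roll back on any failure, keep after N clean runs."""
    window = list(results_after_patch)[:n]
    if not all(window):
        return "rollback"  # also mark the case for retraining
    return "keep" if len(window) >= n else "monitoring"
```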

Adopting self-healing test suites is not about replacing developers — it’s about reducing pointless triage, increasing trust in CI, and turning flaky test noise into actionable data for continuous improvement.

Ready to reduce your CI noise and keep builds green? Start by instrumenting one flaky suite with telemetry and experiment with a conservative selector-heuristic engine.