The rise of Lidar and AR depth mapping has given mobile horror developers an expressive new toolset: games can scan a player’s room, understand its geometry, and seed procedurally placed threats that feel uncannily personal. Walls, furniture, and shadows become design elements, producing scares that are not just cinematic but spatially believable. This shift is changing how tension is authored: location-aware frights replace canned jump-scares, and the phone becomes both flashlight and forensic instrument in an intimate, haunted space.

How Depth Mapping Works in Mobile Horror

At a basic level, modern phones with depth sensors or dual-camera rigs generate a point cloud or mesh of the environment. Software translates that raw depth into usable surfaces, occlusion maps, and navigable floorplans. Mobile engines then anchor virtual objects to these surfaces, calculate line-of-sight occlusions, and estimate player movement from inertial and camera data. For horror games, these technical outputs are the scaffolding for believable, location-aware encounters.
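To make the first step concrete, here is a minimal sketch of how a depth image can be turned into a point cloud. It assumes a simple pinhole camera model with intrinsics `fx`, `fy`, `cx`, `cy`; the function name and the tiny 2x2 depth image are illustrative only and do not reflect any particular AR framework's API.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]


def depth_to_points(depth: List[List[float]], fx: float, fy: float,
                    cx: float, cy: float) -> List[Point]:
    """Back-project a depth image (metres) into camera-space 3D points."""
    points: List[Point] = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0.0:
                # Assumption: non-positive depth means "no sensor return".
                continue
            # Pinhole model: pixel offset from the principal point, scaled by depth.
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# Tiny 2x2 depth image with one missing sample (hypothetical values).
cloud = depth_to_points([[1.0, 2.0], [0.0, 1.0]], fx=1.0, fy=1.0, cx=0.5, cy=0.5)
```

In a real pipeline the framework usually hands over a mesh or point cloud directly, so a step like this is mostly useful for understanding what the later stages consume.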

Key technical building blocks

- Point clouds & mesh reconstruction: Convert depth returns into a continuous model of the room.

- Plane detection & semantic segmentation: Identify floors, walls, and furniture to determine valid spawn points and hiding spaces.

- Occlusion & shadow mapping: Use depth data to hide or reveal threats naturally behind real-world objects.

- Anchoring & persistence: Keep virtual threats fixed relative to the physical space as the player moves.

- Procedural placement algorithms: Place scares using rules of proximity, line-of-sight, and narrative tension curves.
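The occlusion and line-of-sight ideas in the list above can be sketched with a simple geometric test. This example assumes that furniture has already been approximated as axis-aligned bounding boxes, which is a simplification of a real room mesh; `segment_hits_box` and `is_hidden` are hypothetical helper names.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]
Box = Tuple[Vec3, Vec3]  # (min corner, max corner)


def segment_hits_box(a: Vec3, b: Vec3, box: Box) -> bool:
    """Slab test: does the segment a->b pass through the axis-aligned box?"""
    lo, hi = box
    t_min, t_max = 0.0, 1.0
    for axis in range(3):
        d = b[axis] - a[axis]
        if abs(d) < 1e-9:
            # Segment is parallel to this slab; it misses if it starts outside it.
            if a[axis] < lo[axis] or a[axis] > hi[axis]:
                return False
            continue
        t0 = (lo[axis] - a[axis]) / d
        t1 = (hi[axis] - a[axis]) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_min = max(t_min, t0)
        t_max = min(t_max, t1)
        if t_min > t_max:
            return False
    return True


def is_hidden(camera: Vec3, spawn: Vec3, obstacles: Sequence[Box]) -> bool:
    """A spawn point counts as hidden if any obstacle blocks the camera's view of it."""
    return any(segment_hits_box(camera, spawn, box) for box in obstacles)


# Hypothetical scene: a sofa between the camera and one candidate spawn point.
sofa = ((1.0, 0.0, -0.5), (2.0, 1.0, 0.5))
camera = (0.0, 0.5, 0.0)
behind_sofa = (3.0, 0.5, 0.0)
open_floor = (0.0, 0.5, 3.0)
```

A placement rule could then prefer candidates for which `is_hidden` is true, so threats start out of sight and are revealed as the player moves.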

Design Patterns for Location-Aware Scares

Designers are discovering that successful depth-driven horror balances personalization with predictability. The main patterns include:

- Contextual spawns: Enemies or anomalies spawn behind couches, inside closets, or appear to slip through narrow gaps—places the depth map identifies as plausible concealment.

- Environmental puzzles as tension builders: Use the room’s real layout—blocked doorways, a narrow hallway—to create claustrophobic puzzles, timed safes, or escape routes that the player must find under stress.

- Adaptive threat choreography: Threat behavior adapts to the player’s commonly used space—if the player sits in the same chair, the game starts to target that area to increase unease.

- Layered sensory deception: Combine subtle audio cues with AR-projected shadows that align with depth occlusions so the brain expects a physical source of danger.
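Adaptive threat choreography depends on knowing where the player tends to stay. One possible approach, sketched below, is to bin sampled floor positions into a coarse grid and pick the most visited cell. The 0.5 m cell size and the function name are assumptions for illustration, not values from any shipped game.

```python
from collections import Counter
from typing import Iterable, Optional, Tuple

Cell = Tuple[int, int]


def favorite_cell(positions: Iterable[Tuple[float, float]],
                  cell_size: float = 0.5) -> Optional[Cell]:
    """Return the grid cell where the player has been sampled most often."""
    counts: Counter = Counter()
    for x, z in positions:
        # Floor division maps each floor-plane position to a grid cell index.
        counts[(int(x // cell_size), int(z // cell_size))] += 1
    if not counts:
        return None
    cell, _ = counts.most_common(1)[0]
    return cell


# Hypothetical samples: three near a favorite chair, one elsewhere.
samples = [(1.1, 2.0), (1.2, 2.1), (1.3, 2.2), (0.1, 0.1)]
target = favorite_cell(samples)
```

The resulting cell can feed a spawn rule that gradually biases anomalies toward that area, ideally scaled by the player's chosen intensity setting.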

Practical Implementation Tips for Developers

To get the best results from Lidar and AR depth mapping, follow pragmatic steps that preserve immersion while handling real-world variability.

Scan & sanitize

Start with a quiet scanning sequence that guides the player to move their phone slowly. Use clear UI cues (on-screen outlines, progress rings) and give the option to rescan. Sanitize scans by smoothing noisy meshes and filtering dynamic objects so transient clutter (a pet, a passing person) doesn’t break level logic.
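One simple way to filter transient clutter is to keep only geometry that shows up consistently across scan frames. The sketch below assumes each frame has already been reduced to a set of occupied voxel indices; the 80% threshold and the name `stable_voxels` are illustrative choices rather than a recommended standard.

```python
from collections import Counter
from typing import List, Set, Tuple

Voxel = Tuple[int, int, int]


def stable_voxels(frames: List[Set[Voxel]], min_fraction: float = 0.8) -> Set[Voxel]:
    """Keep voxels observed in at least `min_fraction` of the scan frames."""
    if not frames:
        return set()
    seen = Counter(voxel for frame in frames for voxel in frame)
    needed = min_fraction * len(frames)
    return {voxel for voxel, count in seen.items() if count >= needed}


# Hypothetical scan: a wall voxel in every frame, a passing pet in only one.
wall, pet = (0, 0, 0), (3, 0, 1)
frames = [{wall}, {wall, pet}, {wall}, {wall}, {wall}]
static_geometry = stable_voxels(frames)
```

Level logic built on `static_geometry` ignores the pet, while a rescan naturally picks up furniture that has genuinely moved.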

Spawn rules & safety checks

Define strict spawn rules: never place threats in positions that occlude real exits or risk encouraging hazardous behavior. Provide soft boundaries and in-game warnings when the player approaches dangerous real-world objects. Keep all interactions within a safe radius of the device and never require risky physical movement.
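These rules translate naturally into a validation step that every candidate spawn must pass. The following sketch works in floor-plane coordinates and assumes exits and hazards have already been marked as points; the distances in `SafetyRules` are placeholder values that a real game would need to tune and test.

```python
import math
from dataclasses import dataclass
from typing import Sequence, Tuple

Vec2 = Tuple[float, float]  # floor-plane coordinates in metres


@dataclass
class SafetyRules:
    max_radius: float = 2.0        # threats stay within this distance of the player
    exit_clearance: float = 1.0    # keep-out distance around real exits
    hazard_clearance: float = 0.75 # keep-out distance around dangerous objects


def is_safe_spawn(candidate: Vec2, player: Vec2, exits: Sequence[Vec2],
                  hazards: Sequence[Vec2], rules: SafetyRules) -> bool:
    """Reject spawns that are too far away or too close to exits or hazards."""
    if math.dist(candidate, player) > rules.max_radius:
        return False
    if any(math.dist(candidate, e) < rules.exit_clearance for e in exits):
        return False
    if any(math.dist(candidate, h) < rules.hazard_clearance for h in hazards):
        return False
    return True


# Hypothetical room: a door at (2, 0) and a glass table at (0, 2).
rules = SafetyRules()
player, exits, hazards = (0.0, 0.0), [(2.0, 0.0)], [(0.0, 2.0)]
```

Running the check before any procedural placement means creative spawn logic can never override the safety constraints.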

Performance and fallbacks

Depth processing can be expensive. Offer tiered experiences: full spatial mode for devices with Lidar or advanced depth sensors, 2D AR overlay mode for regular devices, and a non-AR fallback that uses virtual rooms when mapping is poor. Cache mesh data and stream only essential updates to preserve battery and frame rate.
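The tiering described above can be expressed as a small decision function. In this sketch, `DeviceReport` and its `mapping_quality` score are hypothetical; how a game actually measures mapping quality depends on the AR framework in use.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    FULL_SPATIAL = "full_spatial"  # mesh-anchored threats on depth-capable devices
    OVERLAY_2D = "overlay_2d"      # AR overlay without room geometry
    VIRTUAL_ROOM = "virtual_room"  # non-AR fallback


@dataclass
class DeviceReport:
    supports_ar: bool
    has_depth_sensor: bool
    mapping_quality: float  # hypothetical score in [0, 1]


def choose_mode(report: DeviceReport, min_quality: float = 0.5) -> Mode:
    """Pick the richest mode the device and current scan can support."""
    if not report.supports_ar or report.mapping_quality < min_quality:
        return Mode.VIRTUAL_ROOM
    if report.has_depth_sensor:
        return Mode.FULL_SPATIAL
    return Mode.OVERLAY_2D
```

Re-evaluating the mode after each rescan lets a session step down gracefully if lighting or tracking degrades mid-play.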

UX Considerations and Player Comfort

Immersion can easily cross into discomfort. Thoughtful UX design protects players while preserving fear.

- Consent & calibration: Ask permission for room scanning and explain how depth data is used. Provide a calibration step to set a safe play zone.

- Opt-out intensity settings: Let players control how aggressively the game adapts to their space (from atmospheric to intense). This respects varying thresholds for fear and reduces the risk of motion sickness or panic.

- Clear safety overlays: Show persistent, minimal overlays indicating the play area’s boundaries and the position of real-world obstacles.

Ethical and Privacy Implications

Depth data is sensitive: it is a structural fingerprint of someone’s private space. Developers must store and process scans locally whenever possible, avoid uploading raw depth captures, and make retention policies explicit. If cloud storage is necessary (for multiplayer or analytics), obtain explicit consent first, then simplify and anonymize mesh data to strip personally identifiable features before anything leaves the device.
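As one illustration of gating uploads behind consent and reducing detail, the sketch below snaps mesh vertices to a coarse grid and refuses to run without an explicit consent flag. The 0.25 m cell size, `ConsentRequired`, and `coarsen_for_upload` are assumptions made for this example, and coarsening alone should not be treated as a guarantee of anonymity.

```python
import math
from typing import Iterable, List, Tuple

Vec3 = Tuple[float, float, float]
Cell = Tuple[int, int, int]


class ConsentRequired(Exception):
    """Raised when an upload is attempted without explicit consent."""


def coarsen_for_upload(vertices: Iterable[Vec3], consent: bool,
                       cell: float = 0.25) -> List[Cell]:
    """Reduce a mesh to coarse occupied grid cells, only if the player agreed."""
    if not consent:
        raise ConsentRequired("player has not agreed to share scan data")
    cells = {
        (math.floor(x / cell), math.floor(y / cell), math.floor(z / cell))
        for x, y, z in vertices
    }
    # Sorting discards the original vertex order along with fine detail.
    return sorted(cells)


# Hypothetical vertices: two close together, one a metre away.
payload = coarsen_for_upload(
    [(0.01, 0.02, 0.03), (0.05, 0.10, 0.20), (1.0, 0.0, 0.0)], consent=True
)
```

Raising an exception rather than silently returning an empty payload makes a missing consent check visible during development.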

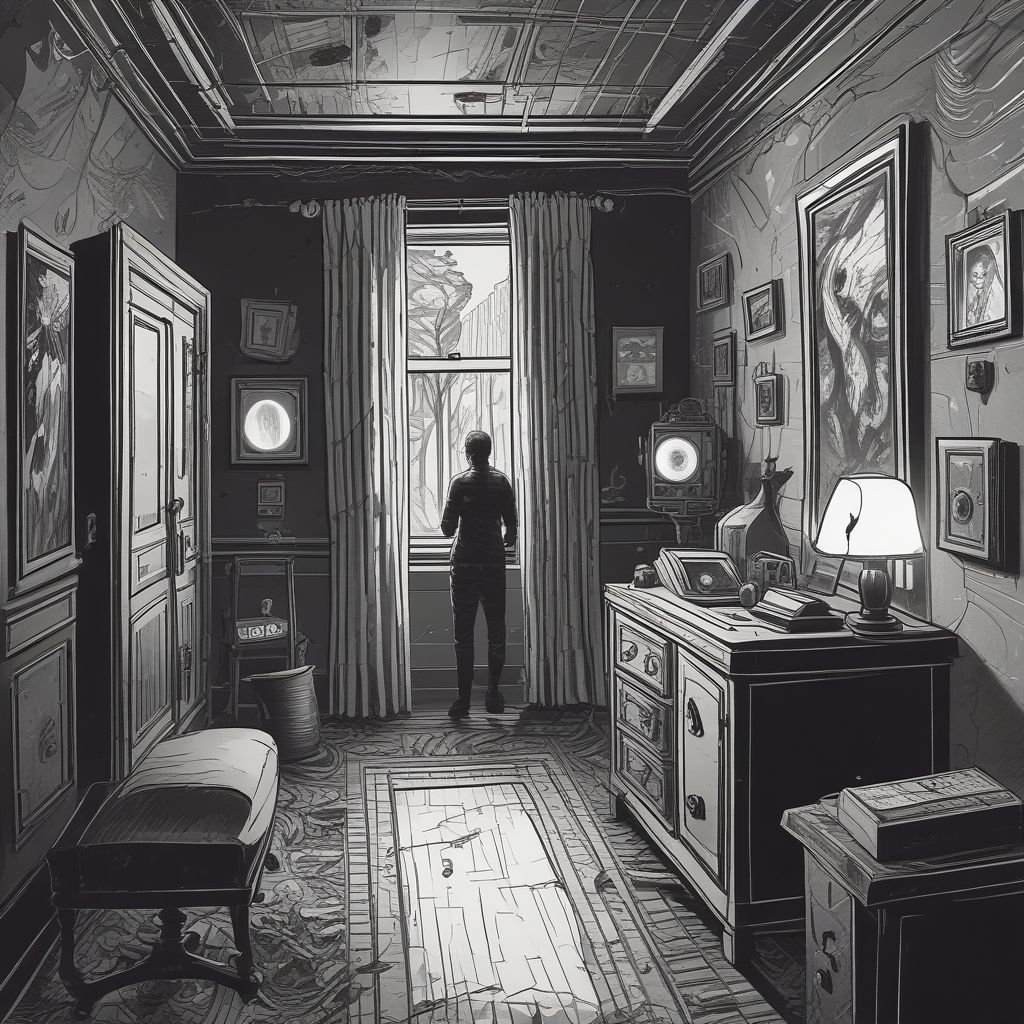

Examples: How a Scene Could Play Out

Imagine a protagonist sweeping their phone across a dim living room. The game detects a sofa, a coffee table, and a narrow corridor. An unsettling hum grows as the player approaches the corridor; a shadow flits behind the sofa. Then, using occlusion mapping, a specter appears to crawl under the coffee table and later emerge from the narrow corridor at chest height. Because the threats are anchored to real geometry, the scares feel physically plausible and the player’s own environment becomes a collaborator in the narrative.

Future Directions

As depth sensors and edge compute power improve, expect games that model real-time environmental changes (pets moving, lights switching), enable multiplayer hauntings where each player’s scan augments a shared AR world, and use AI to craft personalized fear profiles that evolve across play sessions. The core opportunity is intimacy: horror that understands where you live and uses that familiarity to unsettle you in entirely new ways.

Depth Terror—when handled responsibly—offers designers a rare creative edge: the ability to write horror that feels uniquely authored for each player’s space. By combining robust depth mapping, careful design rules, and explicit privacy protections, mobile games can create experiences that are both terrifying and respectful.

Conclusion: Lidar and AR depth mapping are not just new technologies; they are new languages for fear—transforming mundane rooms into staged environments of dread and possibility. Developers who master spatial storytelling, ethical data handling, and player safety will lead a new, deeply personal era of mobile horror.

Ready to see your living room become a stage for terror? Try a depth-mapped demo and experience the difference.