The need to bridge vectors and relations is now central to real-time multimodal search and recommendation systems: combining dense embeddings from images, text, and user behavior with authoritative relational data enables more relevant, explainable, and cost-efficient results. This guide walks through practical patterns—hybrid indexes, batching strategies, and cost-aware query planning—plus a hands-on e-commerce case study showing how to join a vector database to SQL tables for fast, accurate recommendations.

Why combine vector search with SQL?

Vector search excels at semantic similarity for text, images, and user signals. SQL and relational tables store structured facts: prices, inventory, categories, transactions, and business rules. Combining them lets applications present semantically-relevant candidates (vectors) while enforcing constraints, sorting by numeric metrics, and joining rich metadata from relational sources. The result is high-quality, accountable search and recommendations that are production-ready.

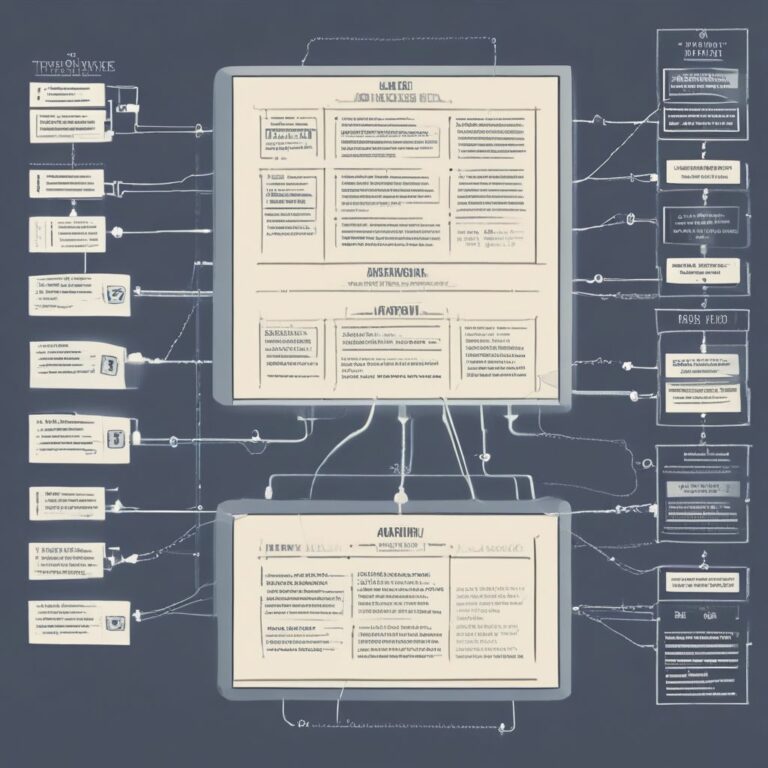

Key architectural patterns

1. Candidate Generation → Re-ranking (Two-stage)

Use the vector database to produce a compact set of semantically-relevant candidates, then enrich and re-rank via SQL. This pattern minimizes expensive vector queries and leverages relational joins for scoring, business rules, and freshness checks.

- Step 1: Query vector DB for top-K embeddings (e.g., K = 100).

- Step 2: Fetch relational metadata (price, stock, user history) for those K rows in a batched SQL query.

- Step 3: Re-rank candidates with a combined score (semantic similarity + business/CTR model + freshness penalty).

2. Hybrid Indexing (Hybrid Score at Search Time)

Some systems support hybrid indexes that combine vector distance with inverted or numeric filters to reduce candidate sets inside the vector store. Where supported, push simple relational filters (category, price range, flags) into the vector store’s filter expression to reduce network roundtrips.

3. Pre-join Embedding Keys to Relational IDs

Persist vector embeddings alongside or linked to the primary key used in the relational database (e.g., product_id, user_id). Use stable ID mapping to avoid expensive lookups and enable direct joins in the candidate stage.

4. Materialized Embedding Views

Create a periodically-updated materialized view or cache that stores (id, embedding, frequently-read attributes). This reduces join cost and allows single-store retrieval for many read paths. Be mindful of staleness windows and use incremental updates when possible.

Batching strategies for efficiency

Batching reduces latency and cost when moving data between the vector store and SQL database:

- Bulk fetch relational rows: Retrieve metadata for top-K candidates in one SQL IN(…) or join with a temp table rather than issuing one query per candidate.

- Asynchronous prefetch: Start SQL fetches in parallel with secondary vector queries (e.g., metadata for top-N while fetching top-K+margin for re-ranking).

- Micro-batching for concurrent requests: Aggregate similar queries from multiple users within a short window to amortize vector-search costs (sensible for recommendation feeds).

- Adaptive K selection: Dynamically choose K based on query type and downstream cost—higher K for cold-start or exploration, lower K for high-throughput paths.

Cost-aware query planning

Design query planners that estimate cost across both systems and pick a low-cost path:

- Estimate vector index latency (depends on dimensionality, index type, and K) and SQL join cardinality to compute expected end-to-end cost.

- Use predicate pushdown when filtering by high-selectivity relational predicates—if you can restrict candidates by a cheap relational filter, do that before the vector step.

- Choose index type adaptively: flat scans for small corpora, IVF/HNSW for larger ones; and adjust recall vs. latency based on business needs.

- Maintain usage metrics and feedback loop: track how many vector candidates survive SQL filters and adjust K and filters to minimize wasted work.

Implementation checklist

- Map stable IDs between systems and ensure consistent key formats (UUIDs vs integers).

- Design efficient batched SQL queries (use temporary tables, indexed joins, or JSON aggregates where helpful).

- Log candidate counts, re-ranking latencies, and filter rejection rates to drive tuning.

- Ensure embedding update pipelines keep vectors synchronized with relational updates (webhooks, CDC, or near-real-time streaming).

- Consider privacy and access controls: apply attribute-level ACLs in the relational step to avoid exposing sensitive metadata.

Case study: Multimodal e-commerce recommendations

Scenario: an online retailer needs “visual + behavioral” product recommendations on the product page that are up-to-date with stock and personalized for the logged-in user.

System components

- Vector DB: stores product image/text embeddings and supports filter expressions.

- Relational DB: product catalog (product_id, price, inventory, category), user history, and business rules.

- Feature Store / Cache: stores user-level personalization features and recent interactions.

- Re-ranker Service: combines similarity score, personalization features, and business penalties (e.g., low stock).

Flow (practical pipeline)

- User views product P; compute embedding for P (or use precomputed embedding).

- Vector DB query: find top-200 nearest neighbors with filter “is_active = true AND category = P.category” (filter pushed into vector store).

- Batched SQL: SELECT product_id, price, inventory, rating FROM products WHERE product_id IN (top-200 ids) in a single query.

- Fetch personalization signals for those product_ids from feature store in a batched request.

- Re-rank with a lightweight model: combined_score = alpha * sim_score + beta * personalization + gamma * log(rating) – delta * out_of_stock_penalty.

- Return top-10 with relational metadata to the client; asynchronously record which suggestions are shown for online learning.

Tuning tips and outcomes

- Start with K = 200 and measure filter rejection; if >70% of candidates are filtered out, reduce the vector K or strengthen pre-filters.

- Push exact-match filters (category, brand) into the vector store when available to cut candidate counts early.

- For cost-sensitive pages (homepage), lower recall and rely more on cached re-ranks; for product detail pages, allow higher K for quality.

Monitoring and evaluation

Track these signals: end-to-end latency, candidate survival rate after SQL filters, CTR of suggested items, and the computational cost per query. Use A/B experiments to quantify business lifts from changing K, model weights (alpha/beta), and batching windows. Instrument the pipeline to detect drifts between embeddings and relational updates (e.g., product discontinued but still in vector index).

Common pitfalls and how to avoid them

- Stale embeddings: use streaming or incremental rebuilds instead of full re-embeddings when possible.

- Mismatch of IDs: enforce canonical key generation and use schema migrations carefully.

- Over-filtering: aggressive predicates can eliminate semantically-relevant items—tune business penalties instead of hard exclusions when relevance matters.

- Cost surprises: simulate expected candidate set sizes to compute projected vector query costs before deploying wide changes.

Bridging vectors and relations combines the best of semantic understanding and structured authority. By using two-stage search, hybrid indexing, thoughtful batching, and cost-aware query planning, teams can deliver high-quality, scalable, and auditable search and recommendation experiences.

Conclusion: implementing these patterns turns disparate systems into a cohesive hybrid search platform—start small (two-stage candidate + batched SQL) and iterate with monitoring to balance cost and quality.

Ready to design a hybrid vector+SQL pipeline for your product? Contact the team to prototype a proof-of-concept.