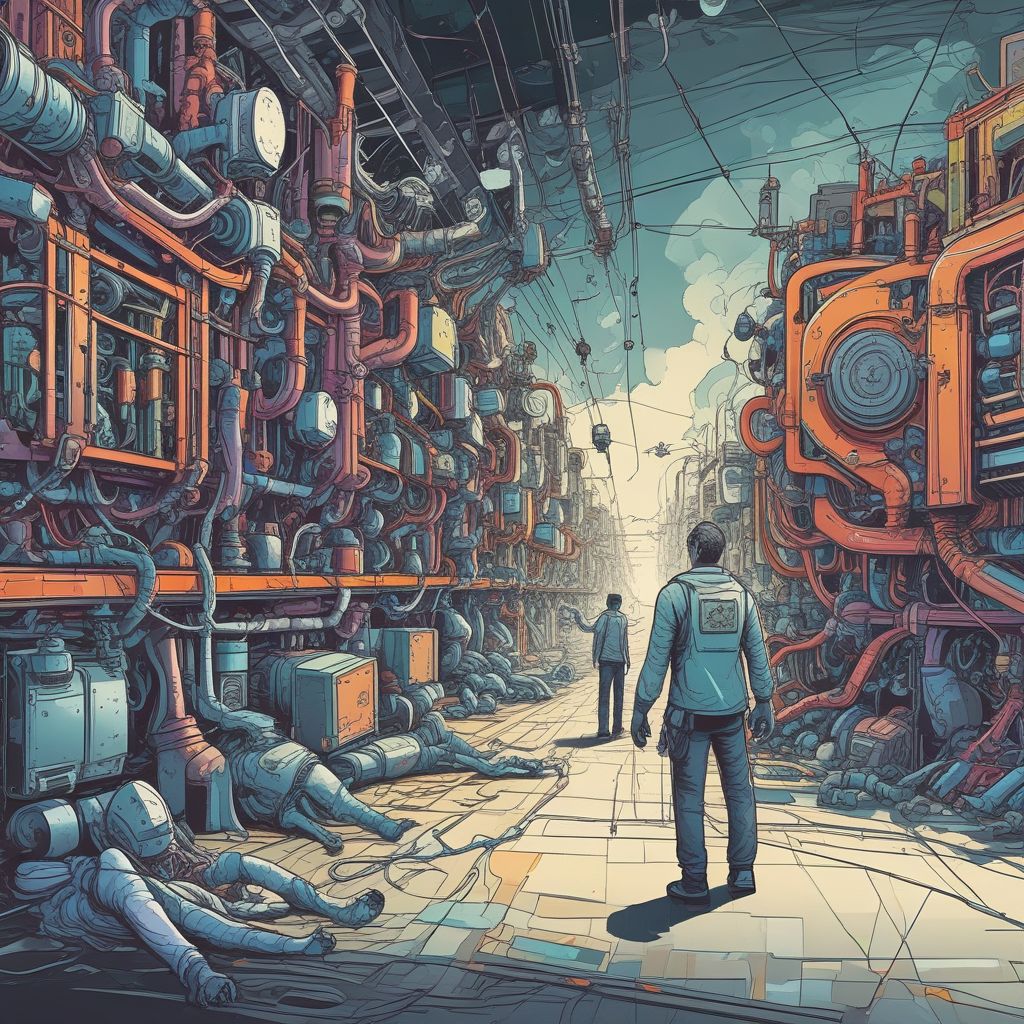

Chaos-Driven CI is an operational approach that intentionally injects controlled failures into continuous integration and continuous deployment (CI/CD) pipelines to prove and improve resilience. Instead of waiting for production incidents to reveal brittle deployments, flaky tests, or hidden state assumptions, teams schedule automated, low-risk failures that exercise observability, rollback procedures, and test hygiene—so problems are discovered and fixed early.

Why add chaos to CI?

Traditional chaos engineering focuses on production, but CI is where code, tests, and deployment contracts meet. Injecting failures into CI exposes issues that usually stay hidden:

- Flaky tests that pass when run in isolation but fail under resource contention.

- Brittle deployment scripts that assume a fixed step order, immutable state, or perfect networking.

- Hidden state assumptions such as reliance on an external service seeded with specific data.

- Gaps in observability and alerting when pipelines are under stress or partially fail.

A practical framework for Chaos-Driven CI

The framework below helps you design automated, scheduled failures that are safe, measurable, and actionable.

1. Define objectives and success criteria

- Objective examples: reduce the flaky-test rate by 50% within 6 months, verify that rollback completes within X minutes, or ensure artifacts are reproducible across runs.

- Success criteria should be measurable: MTTR for failed pipelines, percentage of flaky tests identified, or number of deployment scripts hardened.
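One way to keep criteria like these honest is to encode each one as a measured value plus a target that a resilience run can check automatically. The sketch below is illustrative only: the `Criterion` type, the metric names, and every number in it are hypothetical, and the rollback budget stands in for whatever "X minutes" your team chooses.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Criterion:
    """A measurable success criterion (hypothetical structure)."""
    name: str
    measured: float
    target: float
    higher_is_better: bool = False

    def met(self) -> bool:
        # Lower is better for rates and durations; higher for e.g. success rates.
        if self.higher_is_better:
            return self.measured >= self.target
        return self.measured <= self.target


def reduced_target(baseline: float, reduction: float) -> float:
    """Target after cutting `baseline` by the fraction `reduction` (0.5 means 50%)."""
    if not 0.0 <= reduction <= 1.0:
        raise ValueError("reduction must be between 0 and 1")
    return baseline * (1.0 - reduction)


def evaluate(criteria: List[Criterion]) -> Dict[str, bool]:
    """Map each criterion name to whether its target was met."""
    return {c.name: c.met() for c in criteria}


if __name__ == "__main__":
    # Illustrative values only: a 10% baseline flaky-test rate and a rollback
    # budget picked by the team.
    rollback_budget_minutes = 10.0
    criteria = [
        Criterion("flaky_test_rate", measured=0.04, target=reduced_target(0.10, 0.5)),
        Criterion("rollback_minutes", measured=7.5, target=rollback_budget_minutes),
    ]
    print(evaluate(criteria))
```

Keeping the direction of "better" explicit on each criterion avoids a common mistake: treating a success rate and a duration as if both should shrink.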

2. Identify a safe blast radius

Start small. Limit chaos to specific pipeline stages (unit test matrix, integration job on staging only, deployment job to canary environment) and to non-critical branches (feature or staging branches). Gradually expand once confidence grows.
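A blast radius is easiest to enforce when it is an explicit allow-list that every chaos job consults before injecting anything. The sketch below assumes hypothetical stage names and branch patterns; substitute the real ones from your pipeline.

```python
from fnmatch import fnmatchcase
from typing import FrozenSet, Tuple

# Hypothetical allow-lists: replace with your pipeline's real stage and branch names.
ALLOWED_STAGES: FrozenSet[str] = frozenset(
    {"unit-test-matrix", "integration-staging", "deploy-canary"}
)
ALLOWED_BRANCHES: Tuple[str, ...] = ("feature/*", "staging")


def chaos_allowed(stage: str, branch: str) -> bool:
    """Allow injection only when both stage and branch are inside the blast radius."""
    stage_ok = stage in ALLOWED_STAGES
    # fnmatchcase keeps matching case-sensitive on every OS.
    branch_ok = any(fnmatchcase(branch, pattern) for pattern in ALLOWED_BRANCHES)
    return stage_ok and branch_ok


if __name__ == "__main__":
    print(chaos_allowed("integration-staging", "feature/checkout"))  # True
    print(chaos_allowed("integration-staging", "main"))              # False
    print(chaos_allowed("deploy-production", "feature/checkout"))    # False
```

Because the lists live in code, expanding the blast radius becomes a reviewed change rather than an ad hoc flag flip.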

3. Catalog failure modes to inject

Create a catalog mapped to your objectives:

- Test layer: artificially throttle CPU/memory, inject random sleep/delay in services, or kill containers mid-test to reveal race conditions.

- Build layer: simulate a full disk, lock the artifact store, or corrupt an environment variable to detect brittle scripts.

- Deployment layer: fail a step, simulate slow registries, toggle feature flags, or force a rollback step to verify recovery.

- External dependencies: return 5xx responses from mocks, simulate DNS failure, or add latency to API calls.
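A catalog like this can be kept as plain data so that experiments can be filtered by the objective they serve. The injector names and the objective mapping below are assumptions made for illustration, not a standard taxonomy.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class FailureMode:
    layer: str      # "test", "build", "deployment", or "external"
    name: str       # hypothetical injector identifier
    objective: str  # the objective this experiment serves


# Illustrative catalog drawn from the layers above.
CATALOG: List[FailureMode] = [
    FailureMode("test", "cpu_throttle", "reduce_flaky_tests"),
    FailureMode("test", "random_service_delay", "reduce_flaky_tests"),
    FailureMode("test", "kill_container_mid_test", "reduce_flaky_tests"),
    FailureMode("build", "disk_full", "harden_scripts"),
    FailureMode("build", "lock_artifact_store", "harden_scripts"),
    FailureMode("build", "corrupt_env_var", "harden_scripts"),
    FailureMode("deployment", "fail_promotion_step", "verify_rollback"),
    FailureMode("deployment", "slow_registry", "harden_scripts"),
    FailureMode("external", "mock_returns_5xx", "reduce_flaky_tests"),
    FailureMode("external", "dns_failure", "harden_scripts"),
]


def experiments_for(objective: str, layer: Optional[str] = None) -> List[FailureMode]:
    """Return catalog entries serving `objective`, optionally restricted to one layer."""
    return [
        fm for fm in CATALOG
        if fm.objective == objective and (layer is None or fm.layer == layer)
    ]


if __name__ == "__main__":
    for fm in experiments_for("verify_rollback"):
        print(fm.layer, fm.name)
```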

4. Automate and schedule experiments

Integrate failure injection as parameterized jobs in your CI system (Jenkins, GitHub Actions, GitLab CI). Schedule recurring "resilience runs", for example a weekly pipeline that runs integration and deployment jobs with randomized, low-risk failures. Record the random seed for every run so any experiment can be replayed and debugged deterministically.
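Randomized but replayable selection comes down to drawing from a dedicated, seeded random number generator. The sketch below assumes a hypothetical list of injector names; `plan_run` is an illustrative helper, not part of any CI product.

```python
import random
from typing import List, Sequence


def plan_run(seed: int, injectors: Sequence[str], max_faults: int = 2) -> List[str]:
    """Choose a reproducible subset of injectors for one resilience run.

    A dedicated random.Random(seed) instance, rather than the module-level RNG,
    means the same seed and injector list produce the same plan (on the same
    Python version), so a failing run can be replayed exactly.
    """
    if not injectors or max_faults < 1:
        return []
    rng = random.Random(seed)
    count = rng.randint(1, min(max_faults, len(injectors)))
    return rng.sample(list(injectors), count)


if __name__ == "__main__":
    injectors = ["cpu_throttle", "disk_full", "mock_returns_5xx", "dns_failure"]
    seed = 1234  # in CI, log this value alongside the run so it can be replayed
    plan = plan_run(seed, injectors)
    print(f"seed={seed} plan={plan}")
    assert plan == plan_run(seed, injectors)  # same seed, same plan
```

Capping the number of simultaneous faults keeps each run low-risk and makes it easier to attribute a failure to a specific injection.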

5. Instrument and measure

Observability is non-negotiable. Ensure logs, traces, and metrics are captured and correlated with each chaos run. Track:

- Failure detection time

- MTTR (mean time to recovery)

- Number of flaky tests detected per run

- Deployment rollback success rate
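Given per-run records, the four metrics above reduce to simple aggregates. The `ChaosRun` fields are an assumed schema, and the code assumes all timestamps come from one shared clock; adapt both to whatever your observability stack actually records.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List


@dataclass(frozen=True)
class ChaosRun:
    """One resilience run; timestamps are seconds on a shared clock (assumed)."""
    injected_at: float   # when the fault was injected
    detected_at: float   # when an alert or failing check first fired
    recovered_at: float  # when the pipeline was healthy again
    flaky_tests: int     # tests that failed under chaos but passed on rerun
    rollback_attempted: bool = False
    rollback_succeeded: bool = False


def summarize(runs: List[ChaosRun]) -> Dict[str, float]:
    """Aggregate the tracked metrics across runs."""
    if not runs:
        raise ValueError("need at least one run")
    rollbacks = [r for r in runs if r.rollback_attempted]
    return {
        "mean_detection_s": mean(r.detected_at - r.injected_at for r in runs),
        # MTTR is measured here from injection to recovery; some teams measure
        # from detection instead, so pick one definition and keep it fixed.
        "mttr_s": mean(r.recovered_at - r.injected_at for r in runs),
        "flaky_tests_per_run": mean(r.flaky_tests for r in runs),
        "rollback_success_rate": (
            sum(r.rollback_succeeded for r in rollbacks) / len(rollbacks)
            if rollbacks else float("nan")
        ),
    }


if __name__ == "__main__":
    runs = [
        ChaosRun(0.0, 30.0, 300.0, 2, rollback_attempted=True, rollback_succeeded=True),
        ChaosRun(100.0, 160.0, 500.0, 0),
    ]
    print(summarize(runs))
```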

6. Enforce guardrails and safety checks

- Require a “chaos mode” flag for pipelines and limit runs to off-peak hours.

- Fail-safe toggles: an operator kill switch that disables chaos across the org.

- Notifications and runbooks: every experiment must have an owner, a runbook link, and alerting configured.
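These guardrails can be combined into a single pre-flight check that every chaos job runs before injecting anything. In this sketch the kill switch and chaos-mode flag are plain booleans, and the 01:00 to 05:00 off-peak window is hypothetical; in practice the kill switch would be read from some central configuration your organization controls.

```python
from dataclasses import dataclass
from datetime import datetime, time
from typing import Tuple


@dataclass(frozen=True)
class Experiment:
    owner: str
    runbook_url: str
    alerting_configured: bool


def in_window(now: time, start: time, end: time) -> bool:
    """True if `now` falls in [start, end), handling windows that cross midnight."""
    if start <= end:
        return start <= now < end
    return now >= start or now < end


def may_run_chaos(
    exp: Experiment,
    chaos_mode: bool,
    kill_switch_engaged: bool,
    now: datetime,
    off_peak: Tuple[time, time] = (time(1, 0), time(5, 0)),  # hypothetical window
) -> Tuple[bool, str]:
    """Check every guardrail; return (allowed, reason)."""
    # The kill switch is checked first so it overrides everything else.
    if kill_switch_engaged:
        return False, "org-wide kill switch engaged"
    if not chaos_mode:
        return False, "chaos mode flag not set"
    if not in_window(now.time(), *off_peak):
        return False, "outside off-peak window"
    if not (exp.owner and exp.runbook_url and exp.alerting_configured):
        return False, "experiment lacks an owner, runbook, or alerting"
    return True, "ok"


if __name__ == "__main__":
    exp = Experiment("alice", "https://example.com/runbooks/chaos", True)
    print(may_run_chaos(exp, chaos_mode=True, kill_switch_engaged=False,
                        now=datetime(2024, 1, 1, 2, 30)))  # (True, 'ok')
```

Returning a reason alongside the decision makes skipped runs easy to explain in CI logs.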

Implementation tips and CI integration patterns

Parameterize chaos jobs

Add options such as BLAST_RADIUS, INJECT_TYPE, and SEED to your CI job templates. That makes it easy to run deterministic experiments and scale them across projects.
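A job template might read and validate these parameters from environment variables at the start of the job. The allowed values below are hypothetical; only the three parameter names come from the text above.

```python
import os
import secrets
from dataclasses import dataclass
from typing import Mapping, Optional

# Hypothetical allowed values; align these with your own job templates.
BLAST_RADII = ("stage", "service", "pipeline")
INJECT_TYPES = ("none", "latency", "http_5xx", "cpu_throttle", "disk_full")


@dataclass(frozen=True)
class ChaosParams:
    blast_radius: str
    inject_type: str
    seed: int


def load_params(env: Optional[Mapping[str, str]] = None) -> ChaosParams:
    """Read BLAST_RADIUS, INJECT_TYPE and SEED, validating each one."""
    env = os.environ if env is None else env
    blast_radius = env.get("BLAST_RADIUS", "stage")  # default to the smallest radius
    if blast_radius not in BLAST_RADII:
        raise ValueError(f"unknown BLAST_RADIUS: {blast_radius!r}")
    inject_type = env.get("INJECT_TYPE", "none")
    if inject_type not in INJECT_TYPES:
        raise ValueError(f"unknown INJECT_TYPE: {inject_type!r}")
    raw_seed = env.get("SEED")
    # Generate a seed when none is given, but always report it so the run can be replayed.
    seed = int(raw_seed) if raw_seed else secrets.randbits(32)
    return ChaosParams(blast_radius, inject_type, seed)


if __name__ == "__main__":
    # In CI you would call load_params() with no arguments to read os.environ.
    params = load_params({"BLAST_RADIUS": "service", "INJECT_TYPE": "latency", "SEED": "1234"})
    print(f"chaos params: {params}")
```

Rejecting unknown values up front means a typo in a job template fails loudly instead of silently injecting the wrong fault.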

Use lightweight emulation to avoid production risk

Prefer mocked services, simulated latency via proxy containers, or resource throttling within ephemeral runners rather than hitting production systems. For example, use a sidecar that drops a percentage of HTTP calls during a test job.
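A real sidecar runs as a separate proxy container. As a simplified, in-process sketch of the same idea, the wrapper below drops a configurable fraction of calls by raising an error instead of invoking the real function. `with_drops` and `InjectedFault` are hypothetical names; a network-level proxy would instead return an error response or close the connection.

```python
import random
from typing import Callable, TypeVar

T = TypeVar("T")


class InjectedFault(ConnectionError):
    """Raised instead of calling the real function when a call is dropped."""


def with_drops(call: Callable[[], T], drop_rate: float, rng: random.Random) -> Callable[[], T]:
    """Wrap `call` so that roughly `drop_rate` of invocations fail.

    Passing in a seeded random.Random keeps the pattern of drops reproducible.
    """
    if not 0.0 <= drop_rate <= 1.0:
        raise ValueError("drop_rate must be between 0 and 1")

    def wrapped() -> T:
        if rng.random() < drop_rate:
            raise InjectedFault("chaos: call dropped")
        return call()

    return wrapped


if __name__ == "__main__":
    fetch = with_drops(lambda: "200 OK", drop_rate=0.2, rng=random.Random(42))
    dropped = 0
    for _ in range(1000):
        try:
            fetch()
        except InjectedFault:
            dropped += 1
    # Roughly 200 drops are expected; the exact count is fixed by the seed.
    print(f"dropped {dropped} of 1000 calls")
```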

Leverage feature flags and canaries

When testing deployment resilience, deploy to a canary environment first or toggle a feature flag that triggers a non-destructive path. Validate rollback scripts by intentionally failing the final promotion step and verifying the rollback path.
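The rollback check can be expressed as a test that forces the final promotion step to fail and then asserts the previous version is live again. Everything here is a simulation: `Environment`, `final_promotion`, and `deploy` are hypothetical stand-ins for your real deployment tooling.

```python
from dataclasses import dataclass, field
from typing import List


class PromotionFailed(RuntimeError):
    """Raised by the (simulated) final promotion step."""


@dataclass
class Environment:
    live_version: str
    events: List[str] = field(default_factory=list)


def final_promotion(version: str, inject_failure: bool) -> None:
    # Stand-in for the real promotion step (e.g. shifting all traffic).
    if inject_failure:
        raise PromotionFailed(f"chaos: injected failure while promoting {version}")


def deploy(env: Environment, new_version: str, inject_failure: bool = False) -> bool:
    """Deploy to the canary, then promote; roll back on failure. True if promoted."""
    previous = env.live_version
    env.live_version = new_version
    env.events.append(f"canary:{new_version}")
    try:
        final_promotion(new_version, inject_failure)
    except PromotionFailed:
        env.live_version = previous  # the rollback path under test
        env.events.append(f"rollback:{previous}")
        return False
    env.events.append(f"promoted:{new_version}")
    return True


def verify_rollback(start: str = "v1", candidate: str = "v2") -> bool:
    """Force the promotion to fail and confirm the previous version is live again."""
    env = Environment(live_version=start)
    promoted = deploy(env, candidate, inject_failure=True)
    return (not promoted) and env.live_version == start and env.events[-1] == f"rollback:{start}"


if __name__ == "__main__":
    print("rollback verified:", verify_rollback())
```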

Case studies

Case study 1: Surface flaky tests in a microservices repo

A mid-size SaaS engineering team scheduled weekly CI runs where each service’s integration tests ran under artificial CPU contention using container cgroups. Within two months they identified 37 flaky tests across three services; fixing those tests reduced their master-branch build flakiness from 12% to 2% and restored confidence in CI gating.

Case study 2: Proving deployment rollback in an e-commerce pipeline

An e-commerce platform added a scheduled “rollback verification” job in CI that deliberately injected a configuration mismatch at the promotion step to trigger a rollback. The test verified rollback hooks, artifact immutability, and notification workflows—uncovering a missing DB migration guard that would have caused order processing failures in production.

Playbooks and remediation

Write a short chaos playbook for each experiment that covers:

- Goal and expected outcome

- Blast radius and duration

- Owner and rollback path

- Observability checklist

- Postmortem template and action items

After each run, hold a short triage: classify issues as flaky tests, infrastructure gaps, or deployment script bugs; then assign remediation tasks and schedule follow-up verification runs.
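The playbook fields and the three triage classes map naturally onto a small data model. The sketch below is one possible shape, not a prescribed format: the field names mirror the checklist above, and `missing` simply flags empty fields so incomplete playbooks can be rejected before a run.

```python
from collections import defaultdict
from dataclasses import dataclass, fields
from enum import Enum
from typing import Dict, List, Tuple


@dataclass
class Playbook:
    """Fields mirror the checklist above; values are free-form (assumption)."""
    goal: str
    expected_outcome: str
    blast_radius: str
    duration_minutes: int
    owner: str
    rollback_path: str
    observability_checklist: List[str]
    postmortem_template: str

    def missing(self) -> List[str]:
        """Names of empty fields (a zero duration also counts as missing)."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]


class IssueClass(Enum):
    FLAKY_TEST = "flaky test"
    INFRA_GAP = "infrastructure gap"
    DEPLOY_SCRIPT_BUG = "deployment script bug"


def triage(findings: List[Tuple[str, IssueClass]]) -> Dict[IssueClass, List[str]]:
    """Group post-run findings by class so each group can be assigned an owner."""
    grouped: Dict[IssueClass, List[str]] = defaultdict(list)
    for summary, issue_class in findings:
        grouped[issue_class].append(summary)
    return dict(grouped)


if __name__ == "__main__":
    findings = [
        ("checkout test times out under CPU contention", IssueClass.FLAKY_TEST),
        ("no alert when artifact store is locked", IssueClass.INFRA_GAP),
    ]
    for issue_class, items in triage(findings).items():
        print(issue_class.value, items)
```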

Measuring ROI

Quantify the value by tracking reduced incidents, faster recovery times, and lower mean time to detect. Present improvements as tangible KPIs: fewer hotfixes, fewer production rollbacks, and higher confidence in merging to mainline.

Adopting Chaos-Driven CI is a pragmatic way to shift resilience left—turning your CI system into a proving ground rather than a brittle gatekeeper. With safe experiments, measured outcomes, and accountable remediation, teams can uncover hidden assumptions early, fix flaky behavior, and make deployments predictable.

Conclusion: Start with a small, scheduled experiment in a non-critical pipeline, measure the outcomes, and iterate. Chaos-Driven CI pays dividends by revealing failures that would otherwise stay hidden until production, so they can be fixed before they reach users.

Ready to prove your pipeline’s resilience? Schedule a low-blast-radius chaos run this week and document the results.