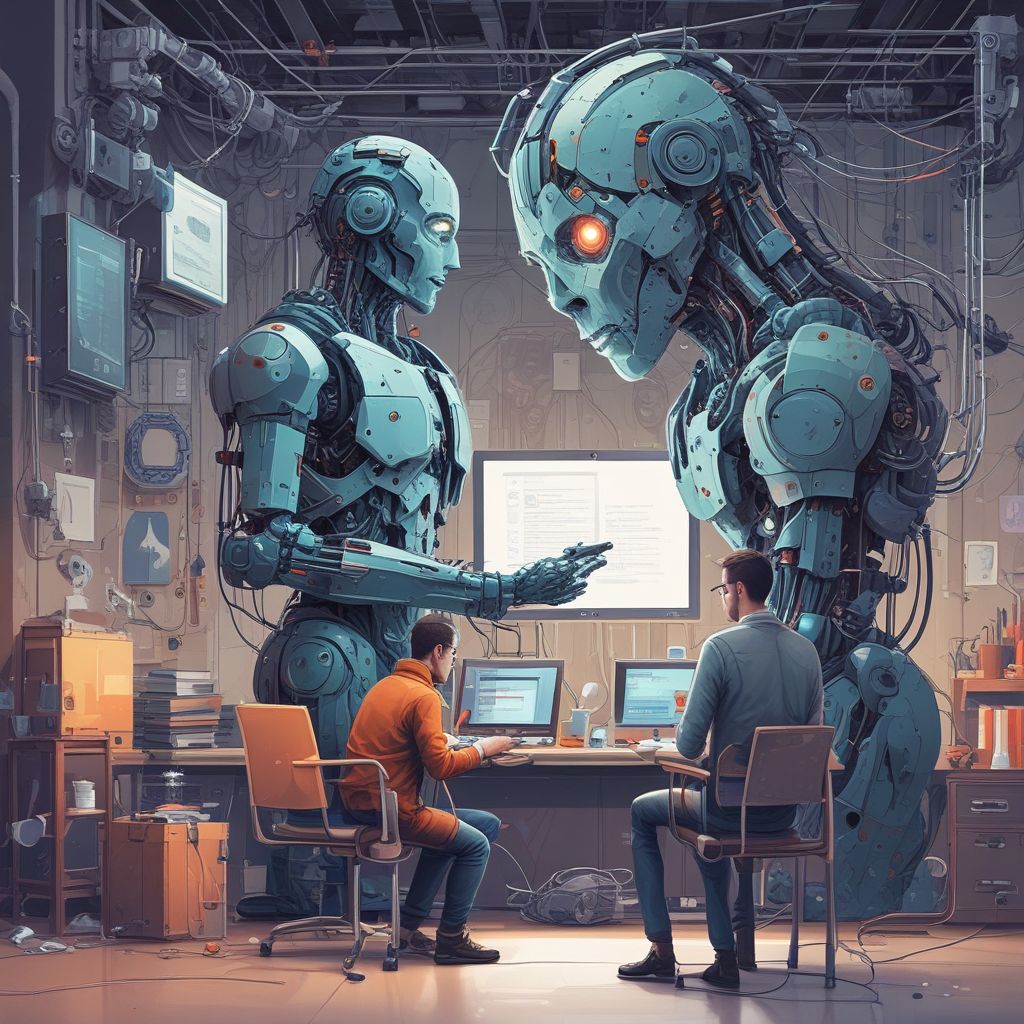

AI-assisted development has brought large productivity gains, and with them a new class of risks. Securing it requires targeted policies, automated CI checks, and robust sandboxing strategies to prevent model-induced vulnerabilities, avoid secret leakage, and reduce supply-chain risk.

Why AI-assisted development changes the security model

Traditional application security focuses on human mistakes, outdated libraries, and misconfiguration. With AI-assisted development, developers increasingly accept model-generated code, dependencies and snippets without full manual review. This introduces unique threats: hallucinated logic, insecure defaults, inadvertent inclusion of credentials, and recommended third-party packages that open supply-chain vectors.

Common model-induced vulnerabilities

- Hard-coded secrets and credentials suggested by models or copied from examples.

- Unsafe patterns (e.g., missing input validation, insecure deserialization) introduced by generated code.

- Dependency recommendations that pull from untrusted or trojanized packages.

- Over-permissive infrastructure-as-code (IaC), such as generated templates with broad IAM policies.
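To make the "unsafe patterns" item concrete, the sketch below contrasts a query built by string interpolation, which is easy to accept from a suggestion without noticing the problem, with the parameterized form reviewers should insist on. It uses Python's standard-library `sqlite3` module with an in-memory database; the `users` table and the function names are hypothetical.

```python
import sqlite3


def setup() -> sqlite3.Connection:
    # In-memory database with a hypothetical users table for illustration.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "admin"), ("bob", "viewer")],
    )
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # UNSAFE: user input is interpolated into the SQL text, so a crafted
    # value can change the query's logic (SQL injection).
    query = f"SELECT name, role FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # SAFE: the value is sent as a bound parameter and never parsed as SQL.
    return conn.execute(
        "SELECT name, role FROM users WHERE name = ?", (name,)
    ).fetchall()


if __name__ == "__main__":
    conn = setup()
    payload = "x' OR '1'='1"
    print(find_user_unsafe(conn, payload))  # every row comes back
    print(find_user_safe(conn, payload))    # no rows: treated as a literal name
```

The same payload returns the whole table through the unsafe function and nothing through the safe one, because the bound parameter is compared as a plain string.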

Policy foundations: rules that every team should adopt

Policies set the guardrails for safe AI usage. A short, enforceable policy is better than a long handbook that nobody reads.

Core AI-assisted development policy items

- Never accept code with secrets: All model-suggested code must be scanned for keys, tokens, and private certificates before merge.

- Require human review: Any generated code touching authentication, cryptography, or network boundaries must undergo mandatory peer review.

- Allowlist dependency sources: Only allow packages from approved registries at pinned versions; block obscure or newly published packages until they have been audited.

- Least privilege for IaC: Auto-generated infrastructure code must use policy-as-code gates (e.g., Sentinel, Open Policy Agent) to enforce narrow IAM roles.

- Audit logging: Log model prompts, responses used in commits, and which developer invoked generation tools for traceability.
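The least-privilege rule is normally written for a policy-as-code engine such as Open Policy Agent (in Rego) or Sentinel. To show the logic without assuming either engine, here is a minimal Python sketch of one such rule applied to an AWS-style IAM policy document: it flags `Allow` statements whose `Action` is `*` or a whole-service wildcard like `s3:*`, and any `Resource` of `*`. The function names, script name, and exact rule set are assumptions for illustration; real gates usually need exceptions, since some AWS actions only accept `"Resource": "*"`.

```python
import json
import sys
from typing import Any


def _as_list(value: Any) -> list:
    # IAM fields may hold a single string/object or a list of them.
    if value is None:
        return []
    return value if isinstance(value, list) else [value]


def wildcard_violations(policy: dict) -> list[str]:
    """Return human-readable findings for overly broad Allow statements."""
    findings = []
    for i, stmt in enumerate(_as_list(policy.get("Statement"))):
        if stmt.get("Effect") != "Allow":
            continue  # Deny statements restrict access, so they are not flagged
        for action in _as_list(stmt.get("Action")):
            if action == "*" or action.endswith(":*"):
                findings.append(f"statement {i}: wildcard action {action!r}")
        for resource in _as_list(stmt.get("Resource")):
            if resource == "*":
                findings.append(f"statement {i}: wildcard resource")
    return findings


if __name__ == "__main__":
    # Usage (hypothetical CI step): python iam_gate.py generated-policy.json
    with open(sys.argv[1], encoding="utf-8") as fh:
        problems = wildcard_violations(json.load(fh))
    for problem in problems:
        print(problem)
    sys.exit(1 if problems else 0)  # a non-zero exit fails the pipeline job
```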

CI/CD checks: automated defenses in the pipeline

Automate detection and enforcement so checks run consistently and quickly. Integrating these checks into CI prevents risky code from reaching production.

Essential CI checks for AI-assisted development

- Secret scanning: Run pattern-based and entropy checks (e.g., Gitleaks, TruffleHog) on diffs to catch accidentally introduced credentials.

- Static application security testing (SAST): Use SAST tools that detect insecure API usage, injection risks, and weak cryptography in generated code.

- Dependency analysis: Verify package signatures, compare to SBOM policies, and fail builds when unapproved or vulnerable packages are introduced.

- Policy-as-code gates: Block merges when IaC templates violate constraints (open network access, wildcard IAM, public buckets, etc.).

- Unit & fuzz tests: Fail fast if generated logic lacks tests or if fuzzing exposes unexpected failure modes.
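Gitleaks and TruffleHog ship large, maintained rule sets, and a real pipeline should run one of them. To show what "pattern-based and entropy checks on diffs" means in practice, here is a minimal Python sketch that inspects only the added lines of a unified diff, applies two illustrative regexes, and flags long tokens whose Shannon entropy exceeds an assumed threshold of 4.0 bits per character. The rule names, token pattern, and threshold are assumptions to tune per repository.

```python
import math
import re
import sys
from collections import Counter

# Illustrative rules only; real scanners maintain many tuned patterns.
PATTERNS = {
    "aws-access-key-id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private-key-header": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
}
TOKEN = re.compile(r"[A-Za-z0-9+/=_\-]{20,}")  # long base64/hex-like runs
ENTROPY_THRESHOLD = 4.0  # bits per character; an assumed starting point


def shannon_entropy(s: str) -> float:
    """Average information per character, in bits."""
    n = len(s)
    return -sum(c / n * math.log2(c / n) for c in Counter(s).values())


def scan_diff(diff_text: str) -> list[tuple[str, int]]:
    """Return (rule, diff_line_number) pairs for suspicious added lines."""
    findings = []
    for lineno, line in enumerate(diff_text.splitlines(), start=1):
        # Only added lines matter; skip the "+++ b/path" file header.
        if not line.startswith("+") or line.startswith("+++"):
            continue
        added = line[1:]
        for rule, pattern in PATTERNS.items():
            if pattern.search(added):
                findings.append((rule, lineno))
        if any(shannon_entropy(t) > ENTROPY_THRESHOLD for t in TOKEN.findall(added)):
            findings.append(("high-entropy-string", lineno))
    return findings


if __name__ == "__main__":
    results = scan_diff(sys.stdin.read())
    for rule, lineno in results:
        # Report locations, not matched text, so CI logs do not leak the secret.
        print(f"diff line {lineno}: {rule}")
    sys.exit(1 if results else 0)
```

In CI it could be fed the PR diff, for example `git diff origin/main...HEAD | python scan_diff.py` (the script name is hypothetical). Reporting line numbers instead of matches keeps the scanner itself from copying a leaked key into build logs.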

Practical CI implementation steps

- Add a pre-merge job that scans the PR diff for secrets and flagged patterns.

- Run SAST and dependency checks in parallel to keep pipelines fast; cache results where possible.

- Enforce SBOM generation for every build and compare against an allowlist of approved licenses and vendors.

- Record model provenance (hashes of the prompt and response) as build metadata to enable retroactive audits.
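The SBOM comparison step can be sketched as a small script that walks the `components` array of a CycloneDX JSON SBOM and checks each component's SPDX license ids and supplier name against allowlists. This is a simplified sketch: it reads only `licenses[].license.id` and `supplier.name`, ignores license expressions, and the allowlist contents and script name are hypothetical. Many real SBOMs leave `supplier` empty, so a production gate would likely need a fallback such as the package URL.

```python
import json
import sys

# Hypothetical allowlists; populate from your organization's policy.
APPROVED_LICENSES = {"MIT", "Apache-2.0", "BSD-3-Clause"}
APPROVED_SUPPLIERS = {"Example Corp", "Example Foundation"}


def license_ids(component: dict) -> set[str]:
    # CycloneDX entries look like {"license": {"id": "MIT"}} or
    # {"expression": "..."}; this sketch only collects plain SPDX ids.
    ids = set()
    for entry in component.get("licenses", []):
        lic = entry.get("license", {})
        if "id" in lic:
            ids.add(lic["id"])
    return ids


def sbom_violations(sbom: dict) -> list[str]:
    problems = []
    for comp in sbom.get("components", []):
        label = f"{comp.get('name')}@{comp.get('version')}"
        ids = license_ids(comp)
        if not ids:
            problems.append(f"{label}: no SPDX license id")
        elif not ids <= APPROVED_LICENSES:
            # Conservative: every listed license must be approved.
            problems.append(f"{label}: unapproved license {sorted(ids - APPROVED_LICENSES)}")
        supplier = (comp.get("supplier") or {}).get("name")
        if supplier not in APPROVED_SUPPLIERS:
            problems.append(f"{label}: unapproved supplier {supplier!r}")
    return problems


if __name__ == "__main__":
    # Usage (hypothetical): python sbom_gate.py bom.json
    with open(sys.argv[1], encoding="utf-8") as fh:
        problems = sbom_violations(json.load(fh))
    print("\n".join(problems) or "SBOM matches allowlists")
    sys.exit(1 if problems else 0)
```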

Sandboxing generated code: safe execution and testing

Running model-generated code in isolated environments greatly reduces blast radius and allows safer validation.

Sandboxing best practices

- Ephemeral runner environments: Execute suggested scripts in ephemeral containers with minimal capabilities and no access to secrets.

- Network isolation: Deny outbound network access by default; allow controlled egress through proxies that log and filter traffic.

- Credential vaulting: Never inject production credentials into sandboxes; use synthetic or read-only test credentials provisioned from a secrets manager.

- Runtime policy enforcement: Use Linux seccomp, AppArmor, or container policies to limit syscalls and resource use during test runs.

- Behavioral monitoring: Capture system calls, file writes, and network attempts to detect malicious or unexpected behavior from generated code.
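Real isolation for these practices comes from containers or VMs combined with seccomp or AppArmor profiles and network policy, which cannot be shown portably in a few lines. The Python sketch below illustrates only two of the ideas at the process level on a POSIX system: the child gets an empty environment, so no tokens or keys are inherited from the runner, and `resource.setrlimit` caps CPU time, address space, and open files before the code runs. The limit values are arbitrary examples, and this is not a security boundary by itself.

```python
import resource
import subprocess
import sys
import tempfile


def _limit_resources() -> None:
    # Runs in the child just before exec (POSIX only). Values are examples.
    resource.setrlimit(resource.RLIMIT_CPU, (5, 5))             # CPU seconds
    resource.setrlimit(resource.RLIMIT_AS, (1 << 30, 1 << 30))  # 1 GiB address space
    resource.setrlimit(resource.RLIMIT_NOFILE, (64, 64))        # open file descriptors


def run_untrusted(code: str, timeout: float = 10.0) -> subprocess.CompletedProcess:
    """Run generated Python code in a resource-limited child process.

    Limits accidental damage only; it is not a substitute for a container,
    a seccomp profile, or network isolation.
    """
    with tempfile.TemporaryDirectory() as scratch:
        return subprocess.run(
            [sys.executable, "-I", "-c", code],  # -I: isolated mode (ignores PYTHON* vars, user site)
            env={},                # nothing inherited: no CI tokens or cloud keys
            cwd=scratch,           # throwaway working directory, deleted afterwards
            capture_output=True,
            text=True,
            timeout=timeout,       # raises subprocess.TimeoutExpired if exceeded
            preexec_fn=_limit_resources,  # not thread-safe; fine in a single-threaded runner
        )
```

The captured stdout, stderr, exit code, and any `TimeoutExpired` are the kind of signals the behavioral-monitoring item would record for each run.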

Developer workflows: safe and usable patterns

Safeguards only get adopted if they add little friction. Integrate them into IDEs and documented workflows so developers follow them naturally.

Suggested developer workflow

- Generate a snippet or scaffold using the team’s approved AI tool configured to avoid secret suggestions.

- Run local pre-commit hooks that perform basic linting, secret scanning, and dependency checks.

- Open a PR that includes the prompt and model response (or a reference hash) so reviewers can inspect the origin.

- CI runs SAST, dependency checks, SBOM comparison and sandboxed unit tests; build metadata records model usage.

- The reviewer approves; merge only if all checks pass and the reviewer has no outstanding security concerns.
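The final gate in this workflow can be sketched as a pure function that a CI job or bot might evaluate before allowing a merge. It combines the review policy above (changes to security-sensitive paths need a qualified reviewer) with the requirement that every check passes. The path patterns, reviewer handles, and the `"success"` status string are hypothetical; in practice the code host's branch-protection and code-owner features would usually enforce the same rules.

```python
from fnmatch import fnmatch

# Hypothetical patterns for code touching auth, crypto, or network boundaries.
SENSITIVE_PATTERNS = ["*/auth/*", "*/crypto/*", "*/net/*", "infra/*.tf"]
SECURITY_REVIEWERS = {"sec-alice", "sec-bob"}  # hypothetical reviewer handles


def touches_sensitive_code(paths: list[str]) -> bool:
    # fnmatch's "*" also matches "/", so "*/auth/*" matches nested paths.
    return any(fnmatch(p, pat) for p in paths for pat in SENSITIVE_PATTERNS)


def merge_allowed(
    paths: list[str], check_results: dict[str, str], approvers: list[str]
) -> tuple[bool, str]:
    """Return (allowed, reason) for a pull request."""
    failed = sorted(name for name, status in check_results.items() if status != "success")
    if failed:
        return False, "failing checks: " + ", ".join(failed)
    if not approvers:
        return False, "no reviewer approval"
    if touches_sensitive_code(paths) and not SECURITY_REVIEWERS & set(approvers):
        return False, "sensitive paths require a security reviewer"
    return True, "ok"
```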

Auditability and incident response

When something goes wrong, the ability to trace back to the prompt, model version, and context is crucial.

- Require that the prompts and responses behind production code be stored as part of the commit or build metadata and retained for at least 90 days.

- Maintain a searchable index of generated artifacts, who requested them, and where they were used.

- Include AI-generated code scenarios in tabletop exercises to validate detection and remediation steps.
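A minimal sketch of the record-keeping behind these items, assuming a JSON Lines file as the searchable index: each entry stores SHA-256 hashes of the prompt and response (the full text would live in a separate store under the retention policy), the model identifier, the requester, the commit, and the affected files. Every field and function name here is hypothetical; a production system would more likely write to a database or the build system's metadata store.

```python
import hashlib
import json
from collections.abc import Iterable
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass
class GenerationRecord:
    # Illustrative schema; adapt to your build metadata format.
    prompt_sha256: str
    response_sha256: str
    model: str
    requested_by: str
    commit: str
    files: list[str]
    recorded_at: str


def sha256_hex(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def make_record(prompt: str, response: str, model: str, requested_by: str,
                commit: str, files: Iterable[str]) -> GenerationRecord:
    return GenerationRecord(
        prompt_sha256=sha256_hex(prompt),
        response_sha256=sha256_hex(response),
        model=model,
        requested_by=requested_by,
        commit=commit,
        files=list(files),
        recorded_at=datetime.now(timezone.utc).isoformat(),
    )


def append_to_index(path: str, record: GenerationRecord) -> None:
    # JSON Lines: one record per line, cheap to append and to grep.
    with open(path, "a", encoding="utf-8") as fh:
        fh.write(json.dumps(asdict(record)) + "\n")


def find_by_file(path: str, filename: str) -> list[dict]:
    # Answers "which generations touched this file?" during an incident.
    with open(path, encoding="utf-8") as fh:
        return [rec for rec in map(json.loads, fh) if filename in rec["files"]]
```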

Balancing speed and safety

AI-assisted development accelerates delivery but must not shortcut security. Start with a minimal set of high-value checks (secrets, dependency allowlist, and mandatory reviews) and iterate. Monitor false positives and developer friction, adjust rules and tooling, and invest in developer training so teams can recognize and fix model-induced issues.

By codifying policies, automating CI checks, and running generated code in secure sandboxes, organizations can harness the benefits of AI while minimizing model-induced vulnerabilities and supply-chain risk.

Conclusion: Securing AI-assisted development is an engineering problem with social and technical solutions — adopt enforceable policies, integrate automated CI defenses, sandbox generated code, and keep detailed audit trails to safely scale AI in your development lifecycle.

Ready to secure your AI-assisted workflow? Start by adding secret scanning and a dependency allowlist to your next CI pipeline.