The promise of Self-Healing CI is simple but powerful: automatically detect flaky tests with historical flake analysis, use machine learning to pinpoint instability, split and isolate problematic tests, and orchestrate targeted reruns that repair pipelines without human triage. In this article we’ll explore how Self-Healing CI works, why it matters, and practical steps for building pipelines that cut triage time and keep builds reliably green.

Why flaky tests are a CI problem worth solving

Flaky tests (tests that pass or fail nondeterministically against the same code) erode trust in CI systems, slow developer feedback, and impose a constant triage tax. Large engineering teams can spend hours chasing transient failures that aren’t caused by code changes, while queued pipelines and developer context switches multiply the cost. Rather than treating each flaky incident as a manual troubleshooting exercise, Self-Healing CI treats flakiness as a data problem: collect, analyze, act.

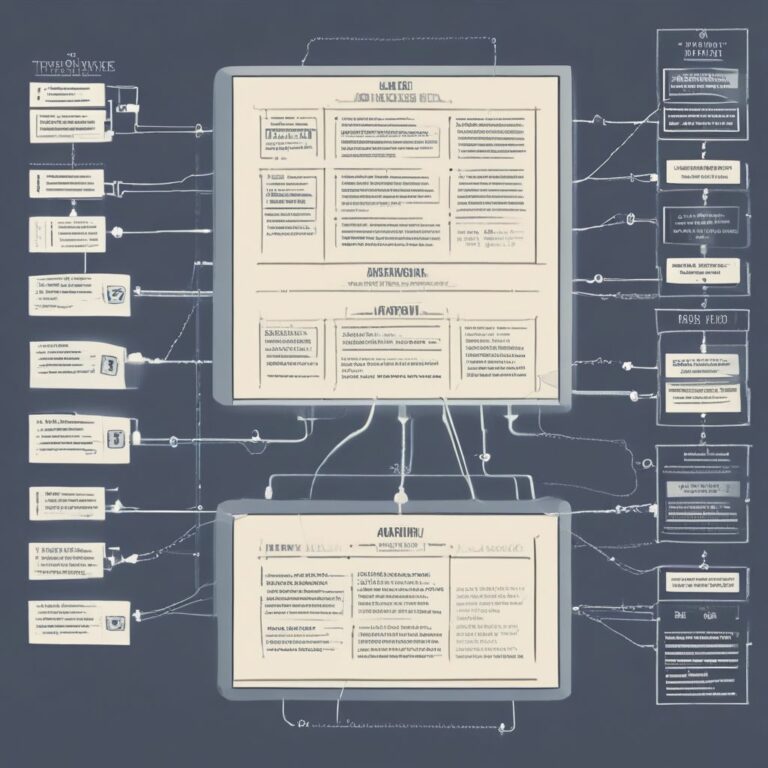

Core components of a Self‑Healing CI pipeline

A robust Self-Healing CI implementation blends four elements: historical flake analysis, ML-driven flake detection, test-splitting/isolation, and targeted reruns (or quarantines). Each component reduces uncertainty and automates a portion of the incident lifecycle.

1. Historical flake analysis: learn from the past

- Aggregate test results across builds, agents, and environments to establish baseline patterns.

- Compute metrics like flake rate, mean time between flakes, and environment correlation (specific OS, container image, network conditions).

- Use time-series views and changelogs to correlate flakiness with infra changes, dependency upgrades, or third-party API instability.
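
Here is a minimal Python sketch of the first two bullets. It computes a per-test flake rate and a per-environment failure rate from raw run records. It assumes a flake is a test that both passed and failed against the same commit. The `RunRecord` shape, its field names, and the sample data are hypothetical stand-ins for whatever your test results database actually stores.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class RunRecord:
    test_id: str
    commit: str   # code revision the test ran against
    env: str      # hypothetical environment label, e.g. OS or container image tag
    passed: bool


def flake_rates(runs):
    """Per-test fraction of commits on which the test both passed and failed."""
    seen = defaultdict(lambda: defaultdict(set))  # test_id -> commit -> {outcomes}
    for r in runs:
        seen[r.test_id][r.commit].add(r.passed)
    return {
        test_id: sum(1 for outcomes in by_commit.values() if len(outcomes) == 2) / len(by_commit)
        for test_id, by_commit in seen.items()
    }


def failure_rate_by_env(runs, test_id):
    """Failure rate of one test broken down by environment, to surface env correlation."""
    totals, failures = defaultdict(int), defaultdict(int)
    for r in runs:
        if r.test_id == test_id:
            totals[r.env] += 1
            if not r.passed:
                failures[r.env] += 1
    return {env: failures[env] / totals[env] for env in totals}


runs = [
    RunRecord("test_login", "a1", "ubuntu-22.04", False),
    RunRecord("test_login", "a1", "ubuntu-22.04", True),  # same commit, different outcome
    RunRecord("test_login", "b2", "macos-14", True),
    RunRecord("test_parse", "a1", "ubuntu-22.04", True),
    RunRecord("test_parse", "b2", "macos-14", True),
]
print(flake_rates(runs))                        # {'test_login': 0.5, 'test_parse': 0.0}
print(failure_rate_by_env(runs, "test_login"))  # {'ubuntu-22.04': 0.5, 'macos-14': 0.0}
```

Grouping by commit matters. A test that fails on one commit and passes on the next may simply have been fixed. Opposite outcomes on the same commit, however, point to nondeterminism. Mean time between flakes could be derived in a similar way by ordering flaky commits by timestamp, which this sketch omits.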

2. ML flake detection: surface likely flaky failures

Machine learning models enhance detection by combining features from historical analysis and real-time telemetry. Common approaches include:

- Supervised models that classify a failure as flaky vs. genuine using labeled historical data (test name, failure message, stack trace fingerprint, runtime, machine metadata).

- Anomaly detection that flags unusual failure patterns for a previously stable test.

- Ensemble scoring that weights recent trends more heavily to catch regressions quickly.

ML helps reduce false positives and prioritize which failures should trigger automated remediation (e.g., a rerun) versus human investigation.
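
The sketch below shows the supervised approach under heavy simplifying assumptions. It uses a stack-trace fingerprint that masks volatile tokens such as memory addresses and numbers. It then trains a logistic-regression classifier with plain gradient descent on three hypothetical features. The feature choice, the tiny hand-labeled dataset, and the learning-rate and epoch values are illustrative only. In practice you would likely train on far more labeled history, using an established library such as scikit-learn.

```python
import hashlib
import math
import re


def fingerprint(stack_trace):
    """Hash a stack trace after masking volatile tokens, so recurrences match."""
    masked = re.sub(r"0x[0-9a-fA-F]+", "ADDR", stack_trace)  # memory addresses
    masked = re.sub(r"\d+", "N", masked)  # line numbers, ports, timings (coarse by design)
    return hashlib.sha256(masked.encode("utf-8")).hexdigest()[:16]


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train(X, y, lr=0.5, epochs=2000):
    """Fit logistic-regression weights and bias with batch gradient descent."""
    w, b, n = [0.0] * len(X[0]), 0.0, len(X)
    for _ in range(epochs):
        gw, gb = [0.0] * len(w), 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            gw = [g + err * xj for g, xj in zip(gw, xi)]
            gb += err
        w = [wj - lr * g / n for wj, g in zip(w, gw)]
        b -= lr * gb / n
    return w, b


def flake_probability(model, x):
    w, b = model
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


# Hypothetical features: [historical flake rate, message mentions a timeout,
# fingerprint previously labeled flaky]. Label 1 = flaky, 0 = genuine failure.
X = [
    [0.30, 1, 1], [0.25, 0, 1], [0.40, 1, 1],
    [0.02, 0, 0], [0.00, 0, 0], [0.05, 1, 0],
]
y = [1, 1, 1, 0, 0, 0]
model = train(X, y)

same = fingerprint("TimeoutError at 0x7f3a in conn.py line 42") == fingerprint(
    "TimeoutError at 0x9b11 in conn.py line 57"
)
print(same)  # True: only masked tokens differ
```

A rerun policy would compare the resulting probability against a confidence threshold. Masking every digit is deliberately coarse. It keeps fingerprints stable when line numbers shift, but it can also merge distinct failures that differ only in numbers.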

3. Test-splitting and isolation: make reruns cheap and precise

Large test suites conceal flaky tests among many stable ones. Test-splitting strategies reduce waste and speed feedback:

- Split by test file, test class, or shard to rerun only the smallest reproducible unit.

- Use deterministic sharding (by test ID hash) so reruns target the same environment constraints.

- Leverage isolation techniques (sandboxed containers, mocked services, network partitioning) to reproduce environment-specific flakiness.
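
Deterministic sharding and smallest-unit reruns can be sketched as follows. The sketch assumes pytest-style node IDs (`path::Class::test`), and the helper names are hypothetical. The shard index comes from a SHA-256 digest rather than Python's built-in `hash()`. String hashing is randomized per process unless `PYTHONHASHSEED` is fixed, so `hash()` would move tests between shards from one run to the next.

```python
from __future__ import annotations

import hashlib


def shard_for(test_id: str, num_shards: int) -> int:
    """Stable shard index derived from a cryptographic hash of the test ID."""
    digest = hashlib.sha256(test_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


def split_into_shards(test_ids: list[str], num_shards: int) -> list[list[str]]:
    shards: list[list[str]] = [[] for _ in range(num_shards)]
    for test_id in sorted(test_ids):
        shards[shard_for(test_id, num_shards)].append(test_id)
    return shards


def rerun_scopes(node_id: str) -> list[str]:
    """Rerun scopes from smallest to largest for a pytest-style node ID."""
    parts = node_id.split("::")
    return ["::".join(parts[:i]) for i in range(len(parts), 0, -1)]


print(rerun_scopes("tests/test_auth.py::TestLogin::test_expired_token"))
# ['tests/test_auth.py::TestLogin::test_expired_token', 'tests/test_auth.py::TestLogin', 'tests/test_auth.py']
```

A rerun orchestrator would start with the first scope and widen it only when the failure does not reproduce in isolation. That pattern often hints at a test-order or shared-state dependency.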

4. Targeted reruns and automated repair

Not every failure warrants a rerun. Self‑Healing CI systems apply policy-driven actions:

- Conditional reruns: only rerun if ML model confidence, rarity of failure, and historical flake rate meet thresholds.

- Exponential backoff with a retry budget: run a small number of reruns to confirm flakiness without masking real regressions.

- Automated quarantine: automatically mark tests as quarantined or flake-priority and add annotations to test reports if repeated flakiness is confirmed.
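
A minimal sketch of these three policies follows. The threshold values and field names are assumptions for illustration, not recommended settings. So is reading "rarity of failure" as a cap on the test's recent failure rate. `sleep` is injectable so the backoff can be tested without actually waiting.

```python
import time
from dataclasses import dataclass


@dataclass
class RerunPolicy:
    # All thresholds are hypothetical defaults; tune them from your own telemetry.
    min_flake_score: float = 0.7          # ML confidence that the failure is flaky
    min_flake_rate: float = 0.01          # test must have some history of flaking
    max_recent_failure_rate: float = 0.2  # frequent failures look like real breakage
    max_reruns: int = 3                   # retry budget per failure
    base_delay_s: float = 1.0             # first backoff delay
    quarantine_after: int = 3             # confirmed flakes before quarantine


def should_rerun(policy, flake_score, flake_rate, recent_failure_rate):
    """Rerun only when every signal points to flakiness."""
    return (
        flake_score >= policy.min_flake_score
        and flake_rate >= policy.min_flake_rate
        and recent_failure_rate <= policy.max_recent_failure_rate
    )


def rerun_with_backoff(run_test, policy, sleep=time.sleep):
    """Spend the full retry budget with exponential backoff; return every outcome."""
    outcomes = []
    for attempt in range(policy.max_reruns):
        sleep(policy.base_delay_s * 2 ** attempt)  # 1 s, 2 s, 4 s with the defaults
        outcomes.append(run_test())
    return outcomes


def quarantine_decision(policy, confirmed_flakes, is_critical):
    if confirmed_flakes < policy.quarantine_after:
        return "keep"
    # Critical tests are only flagged; a human must confirm the quarantine.
    return "needs_human_review" if is_critical else "quarantine"
```

The loop spends the whole retry budget so the caller can check whether the reruns agree. If a single pass counts as enough evidence of flakiness, stopping at the first pass is a cheaper alternative.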

Putting it together: a sample pipeline flow

- Developer pushes code; CI runs tests and uploads structured results to a test results database.

- Historical flake analysis computes updated metrics and feature vectors for each failing test.

- An ML model scores each failing test; high-score failures are labeled candidate flakes.

- Candidate flakes trigger small, targeted reruns using test-splitting to isolate the failure source.

- If reruns pass consistently, the system marks the initial failure as flaky and annotates the build; if reruns fail consistently, the failure becomes a developer alert with rich context for debugging.
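
The decision at the end of this flow can be sketched as a small triage function. It assumes the ML score from the earlier step is attached to each failure. It also assumes `rerun` is a caller-supplied hook that reruns one test and returns whether it passed. Both of these, like the 0.7 threshold, are hypothetical. The flow above does not say what to do with mixed rerun outcomes, so the sketch conservatively routes them to a human.

```python
from dataclasses import dataclass
from enum import Enum


class Verdict(Enum):
    FLAKY = "flaky"                # annotate the build, do not block
    ALERT = "alert"                # genuine failure: notify the developer
    INCONCLUSIVE = "inconclusive"  # mixed or missing reruns: route to a human


@dataclass
class Failure:
    test_id: str
    flake_score: float  # ML model output in [0, 1]


def classify(outcomes):
    if outcomes and all(outcomes):
        return Verdict.FLAKY
    if outcomes and not any(outcomes):
        return Verdict.ALERT
    return Verdict.INCONCLUSIVE


def triage(failures, rerun, threshold=0.7, reruns=3):
    """Map each failing test to a verdict; only candidate flakes are rerun."""
    verdicts = {}
    for f in failures:
        if f.flake_score < threshold:
            verdicts[f.test_id] = Verdict.ALERT  # not a candidate flake: skip reruns
        else:
            verdicts[f.test_id] = classify([rerun(f.test_id) for _ in range(reruns)])
    return verdicts


# Scripted rerun results standing in for real CI reruns.
scripted = {
    "test_cache": iter([True, True, True]),
    "test_db": iter([False, False, False]),
    "test_net": iter([True, False, True]),
}
failures = [Failure("test_cache", 0.91), Failure("test_db", 0.80),
            Failure("test_net", 0.95), Failure("test_parse", 0.10)]
verdicts = triage(failures, lambda tid: next(scripted[tid]))
```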

Integration and observability

Self‑Healing CI works best when integrated tightly with existing systems:

- Test results database (structured logs, artifacts, failure fingerprints).

- Telemetry pipelines for agents, container images, and external services to surface correlations.

- Developer workflows: attach flake annotations to pull requests, automatically update issue trackers for quarantined tests, and surface suggested fixes (e.g., a flaky-test debugging checklist).
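
These integrations could share a common shape: a structured record per test execution, plus a generated pull-request note. The field names and the annotation wording below are hypothetical. Posting the text to a specific code-review tool would go through that tool's own API, which is not shown here.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ResultRecord:
    build_id: str
    test_id: str
    passed: bool
    duration_s: float
    fingerprint: str = ""                           # failure fingerprint; empty for passes
    env: dict = field(default_factory=dict)         # agent, OS, image tag, ...
    artifacts: list = field(default_factory=list)   # log or artifact URIs

    def to_json(self):
        return json.dumps(asdict(self), sort_keys=True)


def pr_annotation(test_id, flake_score, passed_reruns, total_reruns):
    """Markdown text a bot could post on a pull request."""
    return (
        f"**Flaky test detected:** `{test_id}` "
        f"(flake score {flake_score:.2f}, {passed_reruns}/{total_reruns} reruns passed). "
        "The original failure is likely unrelated to this change."
    )
```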

Operational safeguards and best practices

Automation must be conservative and auditable to avoid masking real regressions:

- Define clear retry budgets and time windows; don’t allow infinite retries.

- Require human confirmation to permanently quarantine critical tests or ignore failures for gated releases.

- Keep audit logs and explanations for ML decisions so developers understand why a rerun was performed or a test was quarantined.

- Continuously retrain ML models with new labeled examples to reduce drift and improve accuracy.
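
Two of these safeguards can be sketched together. The first is a sliding-window retry budget that refuses reruns once a limit is reached. The second is a one-line JSON audit record that explains each automated action. The limits, field names, and reason strings are assumptions. The clock is injectable so the window can be tested deterministically.

```python
import json
import time
from collections import deque


class RetryBudget:
    """Allow at most max_retries automated reruns per sliding time window."""

    def __init__(self, max_retries, window_s, clock=time.monotonic):
        self.max_retries = max_retries
        self.window_s = window_s
        self.clock = clock
        self._stamps = deque()  # times of reruns still inside the window

    def try_consume(self):
        now = self.clock()
        while self._stamps and now - self._stamps[0] >= self.window_s:
            self._stamps.popleft()  # drop reruns that have aged out of the window
        if len(self._stamps) >= self.max_retries:
            return False  # budget exhausted: escalate instead of retrying
        self._stamps.append(now)
        return True


def audit_record(timestamp, test_id, action, flake_score, reasons):
    """One JSON line explaining an automated decision, for an append-only audit log."""
    return json.dumps(
        {"ts": timestamp, "test_id": test_id, "action": action,
         "flake_score": round(flake_score, 3), "reasons": reasons},
        sort_keys=True,
    )
```

Writing one JSON object per line keeps the audit log append-only. It also makes the log easy to grep or load into the same results database used for flake analysis.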

Example outcomes and ROI

Teams implementing Self‑Healing CI often see measurable gains: lower mean time to repair (MTTR) for test-related build failures, fewer false alerts, and less developer context switching. In favorable cases, a targeted rerun strategy can convert 60–80% of transient failures into automatically resolved incidents while preserving strict gating for true regressions.

Getting started: a pragmatic checklist

- Start by centralizing test results and building a small historical flake dashboard.

- Implement a basic retry policy with test-splitting to validate the cost-benefit of reruns.

- Pilot an ML classifier with a subset of tests and bake explainability into the model outputs.

- Iterate policies (retry limits, quarantine thresholds) based on telemetry and developer feedback.

Self‑Healing CI is not a silver bullet, but it does shift the balance from manual firefighting to data-driven automation. By combining historical flake analysis, ML detection, test-splitting, and targeted reruns, teams can dramatically reduce triage time and keep their builds reliably green.

Conclusion: Adopt Self‑Healing CI incrementally. Start small, measure impact, and expand automation as confidence grows. Automated detection plus surgical reruns protect developer productivity while preserving test integrity.

Ready to cut triage time and keep your pipelines green? Start a pilot Self‑Healing CI run on your most flaky test suites today.