The rise of Observability-as-Code for CI/CD changes how teams ship software. Instead of treating metrics, logs, and traces as afterthoughts, the pipeline treats telemetry as a first-class input, using telemetry contracts, SLO-driven gates, and lightweight ML to predict failures before a deployment reaches production. This article walks through the concepts, practical patterns, and a staged approach to adopting telemetry-first pipelines that preempt deployment-related outages and improve deployment confidence.

Why observability belongs in CI/CD

Traditional CI/CD focuses on unit tests, integration checks, and security scans. Observability-as-Code for CI/CD extends those checks to include runtime signals and historical telemetry so the pipeline can detect regressions that static tests miss. By encoding expectations about metrics and traces into the pipeline, teams can gate deployments not only on correctness but on operational health and reliability.

Benefits at a glance

- Early detection of regressions that only appear under production-like loads.

- Fewer rollback events by catching reliability risks before production traffic.

- Faster feedback to developers with concrete observability evidence tied to commits.

- Clear operational contracts between teams via versioned telemetry contracts.

Core building blocks

Telemetry contracts

Telemetry contracts define the signals a service must emit, their schema, and semantic expectations (for example, latency, error-count semantics, or custom span attributes). Treat them like API contracts: versioned, reviewed, and enforced during CI. Examples of contract assertions include “service X must emit request.latency histogram with labels {region, endpoint}” or “error codes must map to documented SLO categories.”
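
To make the idea concrete, here is a minimal sketch of a contract file and a linter that CI could run. The contract layout (the `service`, `version`, and `metrics` fields) and the function names are assumptions made for illustration, not an established standard.

```python
import json

# Hypothetical contract format; field names are illustrative only.
CONTRACT_JSON = """
{
  "service": "checkout",
  "version": "1.2.0",
  "metrics": [
    {"name": "request.latency", "type": "histogram", "labels": ["region", "endpoint"]},
    {"name": "request.errors", "type": "counter", "labels": ["region", "endpoint", "code"]}
  ]
}
"""

ALLOWED_TYPES = {"counter", "gauge", "histogram"}


def lint_contract(contract: dict) -> list[str]:
    """Return a list of problems; an empty list means the contract is well-formed."""
    problems = []
    for field in ("service", "version", "metrics"):
        if field not in contract:
            problems.append(f"missing top-level field: {field}")
    seen = set()
    for metric in contract.get("metrics", []):
        name = metric.get("name", "<unnamed>")
        if name in seen:
            problems.append(f"duplicate metric: {name}")
        seen.add(name)
        if metric.get("type") not in ALLOWED_TYPES:
            problems.append(f"{name}: unknown type {metric.get('type')!r}")
        if not metric.get("labels"):
            problems.append(f"{name}: at least one label is required")
    return problems


def check_emitted(contract: dict, emitted: dict[str, set[str]]) -> list[str]:
    """Compare the contract with what was observed (metric name -> label names)."""
    problems = []
    for metric in contract["metrics"]:
        labels = emitted.get(metric["name"])
        if labels is None:
            problems.append(f"{metric['name']}: not emitted")
            continue
        missing = set(metric["labels"]) - labels
        if missing:
            problems.append(f"{metric['name']}: missing labels {sorted(missing)}")
    return problems


contract = json.loads(CONTRACT_JSON)
```

A CI step could fail the build whenever either function returns a non-empty list, which keeps the failure message readable for the developer who changed the contract.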

SLO-driven gates

SLO-driven gates evaluate predefined Service Level Objectives against historical baselines and short-term smoke tests. Instead of a pass/fail based only on synthetic tests, a gate considers whether a deployment is likely to push the service out of compliance with its SLOs. Gates can be binary (block/allow) or advisory (require manual approval) depending on risk tolerance.
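
One way to turn an SLO into a gate is to compare the observed error rate with the error rate the SLO allows (a burn rate). The sketch below assumes an availability-style SLO; the thresholds of 1.0 and 2.0 and the decision labels are hypothetical and would need tuning per service.

```python
from dataclasses import dataclass


@dataclass
class Slo:
    name: str
    target: float  # e.g. 0.99 means 99% of requests must succeed


def burn_rate(slo: Slo, good: int, total: int) -> float:
    """Observed error rate divided by the error rate the SLO allows."""
    if total == 0:
        raise ValueError("no requests observed; cannot evaluate the SLO")
    allowed_error_rate = 1.0 - slo.target
    observed_error_rate = 1.0 - good / total
    return observed_error_rate / allowed_error_rate


def gate_decision(rate: float, advisory_at: float = 1.0, block_at: float = 2.0) -> str:
    """Map a burn rate to a binary or advisory outcome (thresholds are assumptions)."""
    if rate >= block_at:
        return "block"
    if rate >= advisory_at:
        return "manual-approval"
    return "allow"


slo = Slo(name="availability", target=0.99)
decision = gate_decision(burn_rate(slo, good=985, total=1000))
```

Here 15 failures in 1,000 requests is a 1.5% error rate against a 1% allowance, so the gate becomes advisory rather than blocking outright.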

Lightweight ML for prediction

Lightweight ML models add predictive power: anomaly detection on metric time series, short-term forecasting for key metrics, and classifier models that map telemetry patterns to likely root causes. The emphasis is on simple, interpretable models (exponential smoothing, ARIMA, isolation forest) that can run quickly in pipelines and produce actionable signals like “60% probability of an SLO breach within 30 minutes.”
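
As an illustration of how small such a model can be, the following sketch uses simple exponential smoothing to produce a one-step-ahead forecast and flags an observation that falls far outside the spread of past forecast errors. The smoothing factor `alpha=0.3` and the multiplier `k=3.0` are assumed defaults, not recommendations from a specific library.

```python
import statistics


def smoothing_residuals(series, alpha=0.3):
    """Run simple exponential smoothing; return (next forecast, past forecast errors)."""
    level = series[0]
    residuals = []
    for x in series[1:]:
        residuals.append(x - level)  # error of the forecast made before seeing x
        level = alpha * x + (1 - alpha) * level
    return level, residuals


def is_anomalous(history, observed, alpha=0.3, k=3.0):
    """Flag `observed` if it deviates from the forecast by more than k error spreads."""
    if len(history) < 3:
        raise ValueError("need at least three historical points")
    forecast, residuals = smoothing_residuals(history, alpha)
    spread = statistics.pstdev(residuals)
    if spread == 0:
        return observed != forecast
    return abs(observed - forecast) > k * spread


history = [100, 102, 98, 101, 99, 100, 103, 97]  # e.g. p95 latency per run, in ms
```

Because the whole model is a running average plus a spread estimate, a developer can read the gate output and see exactly why a value was flagged.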

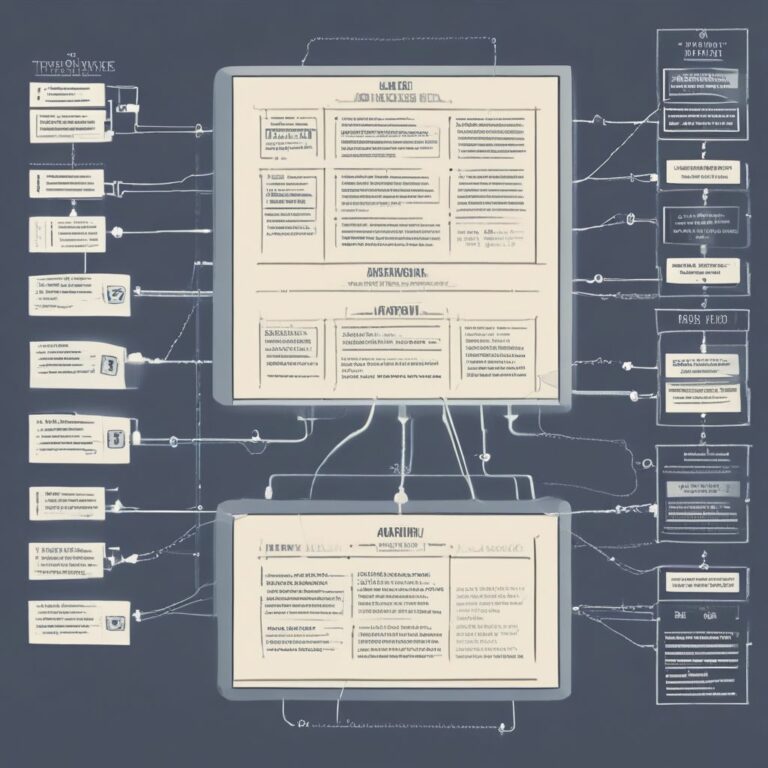

Architecture pattern: telemetry-first pipeline

A telemetry-first CI/CD pipeline typically includes these stages:

- Code & unit tests

- Build & containerize

- Static observability contract linting (validate telemetry contract changes)

- Staging deployment & smoke tests with synthetic traffic

- Telemetry collection from staging + short historical baseline pull

- SLO evaluation and anomaly detection

- Predictive model scoring and gate decision

- Canary / progressive rollout with continuous telemetry checks

Data flows and integrations

Essential integrations include:

- Metrics store (Prometheus, Mimir, or managed metrics)

- Tracing (OpenTelemetry collector + backend)

- Log ingestion (structured logs with schema enforcement)

- Feature store or simple historical store for ML models

- Pipeline runner hooks (e.g., GitHub Actions, Jenkins, Tekton) to call evaluation services
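
As a sketch of the metrics-store integration, the snippet below builds a request for the Prometheus HTTP API instant-query endpoint (`/api/v1/query`) and parses the vector result. The host name, the `request_latency_bucket` metric, and the `endpoint` label are assumptions; a real setup would substitute its own names and add authentication.

```python
import json
from urllib.parse import urlencode

# Hypothetical PromQL: p95 latency per endpoint over the last 5 minutes.
P95_QUERY = (
    "histogram_quantile(0.95, "
    "sum by (le, endpoint) (rate(request_latency_bucket[5m])))"
)


def build_query_url(base_url: str, promql: str) -> str:
    """Build an instant-query URL for the Prometheus HTTP API."""
    return f"{base_url.rstrip('/')}/api/v1/query?{urlencode({'query': promql})}"


def parse_instant_vector(body: str) -> dict[str, float]:
    """Map the `endpoint` label to its sample value from an instant-query response."""
    payload = json.loads(body)
    if payload.get("status") != "success":
        raise RuntimeError(f"query failed: {payload.get('error', 'unknown error')}")
    data = payload["data"]
    if data["resultType"] != "vector":
        raise RuntimeError(f"expected a vector, got {data['resultType']}")
    values = {}
    for sample in data["result"]:
        _timestamp, value = sample["value"]  # the value arrives as a string
        values[sample["metric"].get("endpoint", "")] = float(value)
    return values


# In a pipeline step the body would come from something like:
#   urllib.request.urlopen(build_query_url(base, P95_QUERY), timeout=10).read()
url = build_query_url("http://prometheus.example:9090/", P95_QUERY)
```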

Practical steps to implement Observability-as-Code for CI/CD

- Start with telemetry contracts: codify required metrics, labels, and types in a YAML or JSON file alongside service code; add a linter to CI that fails builds when contracts are invalid.

- Define SLOs and baselines: choose 3–5 key signals (for example latency, error rate, throughput, and one internal business metric) and define SLOs plus acceptable error-budget burn rates for releases.

- Add lightweight smoke tests: run synthetic scenarios against staging and capture the same telemetry the contract requires.

- Run quick anomaly checks: in the pipeline, compare staging telemetry to the baseline using simple statistical tests or z-score thresholds; fail the gate on outliers.

- Introduce predictive scoring: train a simple model on historical incident windows to identify leading signals; use the model to score the staging run and produce a probability of near-term SLO violations.

- Automate gate decisions: combine contract validation, SLO pass/fail, anomaly checks, and predictive scores into a composite gate policy; encode the policy in pipeline config so it’s auditable.

- Iterate and tune: measure false positives and false negatives, tune thresholds and model sensitivity, and expand contracts as necessary.
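
The anomaly-check and gate-automation steps above can be sketched as a z-score helper feeding a small policy object. All names and thresholds here (`GatePolicy`, `max_z=3.0`, the 0.6 and 0.3 probability cut-offs) are hypothetical; the point is only that the policy lives in versioned code or config and returns its reasons.

```python
import statistics
from dataclasses import dataclass


def z_score(baseline, value):
    """Standard score of `value` against baseline samples (needs two or more)."""
    mean = statistics.fmean(baseline)
    stdev = statistics.stdev(baseline)
    if stdev == 0:
        return 0.0 if value == mean else float("inf")
    return (value - mean) / stdev


@dataclass(frozen=True)
class GatePolicy:
    max_z: float = 3.0                 # assumed outlier threshold
    block_probability: float = 0.6     # assumed: block at or above this score
    advisory_probability: float = 0.3  # assumed: ask for approval at or above this


@dataclass
class GateInputs:
    contract_problems: list
    slo_passed: bool
    z: float                   # positive means worse than baseline (e.g. latency)
    breach_probability: float  # output of the predictive model, 0..1


def evaluate(policy: GatePolicy, inputs: GateInputs):
    """Return (decision, reasons) so the outcome is auditable."""
    reasons = []
    if inputs.contract_problems:
        reasons.append("contract validation failed")
    if not inputs.slo_passed:
        reasons.append("SLO check failed")
    if inputs.z > policy.max_z:
        reasons.append(f"metric is {inputs.z:.1f} standard deviations above baseline")
    if inputs.breach_probability >= policy.block_probability:
        reasons.append("predicted breach probability too high")
    if reasons:
        return "block", reasons
    if inputs.breach_probability >= policy.advisory_probability:
        return "manual-approval", ["elevated predicted breach probability"]
    return "allow", []


baseline = [200, 210, 190, 205, 195]  # e.g. p95 latency in ms from earlier runs
policy = GatePolicy()
```

Returning the reasons alongside the decision makes it easier to measure false positives later, since each blocked deployment records which check triggered.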

Examples and heuristics

Example telemetry contract assertion: “95th percentile request latency for /checkout must be < 350 ms over a 5-minute rolling window in staging.” The pipeline checks this after the staging smoke test and compares the result against a baseline (the last 7 days) before allowing promotion.
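
A check like this could be evaluated directly from raw smoke-test samples. The sketch below uses a nearest-rank percentile and slides a 5-minute window in 60-second steps; the step size and the `(timestamp, latency)` sample shape are assumptions, and a production setup would more likely query the metrics store.

```python
import math


def percentile(samples, p):
    """Nearest-rank percentile: smallest value with at least p% of samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def worst_rolling_p95(samples, window_s=300, step_s=60):
    """samples: list of (unix_seconds, latency_ms). Returns the highest windowed p95."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    first, last = ordered[0][0], ordered[-1][0]
    window_end = min(first + window_s, last)
    worst = None
    while True:
        in_window = [ms for ts, ms in ordered if window_end - window_s <= ts <= window_end]
        if in_window:
            p95 = percentile(in_window, 95)
            worst = p95 if worst is None else max(worst, p95)
        if window_end >= last:
            break
        window_end = min(window_end + step_s, last)
    return worst


def latency_assertion_holds(samples, limit_ms=350.0):
    """True if every rolling window stays strictly under the limit."""
    return worst_rolling_p95(samples) < limit_ms
```

Using the worst window rather than the overall p95 means a latency spike late in the smoke test is not averaged away by earlier healthy traffic.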

Heuristics to reduce noise:

- Use relative change thresholds (e.g., >30% increase over baseline) rather than absolute values when baselines vary.

- Prefer short, frequent checks over large, infrequent analyses to keep the pipeline fast.

- Flag and quarantine telemetry contract changes for manual review if they increase risk.
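
The relative-threshold heuristic is small enough to show in full. In this sketch the 30% default comes from the example above, while the optional `min_abs_delta` floor is an added assumption to keep very small baselines from tripping the check on trivial changes.

```python
def relative_change(baseline: float, current: float) -> float:
    """Fractional change of `current` relative to `baseline` (0.25 means +25%)."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (current - baseline) / baseline


def exceeds_threshold(baseline, current, max_increase=0.30, min_abs_delta=0.0):
    """True if the increase is both relatively and absolutely large enough to matter."""
    if current - baseline < min_abs_delta:
        return False
    return relative_change(baseline, current) > max_increase


regressed = exceeds_threshold(baseline=200.0, current=270.0)  # +35% over baseline
```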

Pitfalls and governance

Common pitfalls include overfitting ML models to noisy historical incidents, creating overly strict gates that block legitimate changes, and ignoring label or schema drift. Establish governance: version contracts, have a telemetry review board for contract changes, and maintain a “risk mode” for emergency releases that allows expedited approvals with postmortem requirements.

Measuring success

Key indicators of progress are fewer emergency rollbacks, a shorter mean time to detection (MTTD) for reliability regressions, and fewer incidents attributed to deployment regressions. Track gate pass/fail rates and the predictive model’s precision and recall to tune performance.
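
Precision and recall for the gate can be computed from a simple log of decisions and outcomes. The sketch assumes each record pairs "did the gate flag this deployment" with "was the deployment actually bad", where the second label is assigned after the fact (for example while the gate runs in advisory mode); that labeling process is an assumption, not something the pipeline provides by itself.

```python
from typing import Iterable, Optional, Tuple


def precision_recall(
    records: Iterable[Tuple[bool, bool]],
) -> Tuple[Optional[float], Optional[float]]:
    """records: (gate_flagged, was_bad) pairs. Returns (precision, recall)."""
    tp = fp = fn = 0
    for flagged, was_bad in records:
        if flagged and was_bad:
            tp += 1          # gate correctly flagged a bad deployment
        elif flagged and not was_bad:
            fp += 1          # false alarm: a healthy deployment was flagged
        elif was_bad:
            fn += 1          # miss: a bad deployment passed the gate
    precision = tp / (tp + fp) if tp + fp else None
    recall = tp / (tp + fn) if tp + fn else None
    return precision, recall


log = [(True, True), (True, False), (False, True), (False, False), (True, True)]
precision, recall = precision_recall(log)
```

Low precision points to gates that are too strict, while low recall suggests thresholds or model sensitivity need tightening.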

Adopting Observability-as-Code for CI/CD is an investment that moves operational concerns left into development workflows—making reliability a feature tested with every commit. By combining clear telemetry contracts, SLO-driven gates, and pragmatic ML, teams can catch many failure modes before they reach users.

Conclusion: Observability-as-Code for CI/CD turns telemetry into a proactive safety net—encode what matters, test it in the pipeline, and let predictive signals stop bad deployments before they hit production.

Ready to make your next release safer? Start by adding a telemetry contract to your repo and run a staging smoke test that validates it.