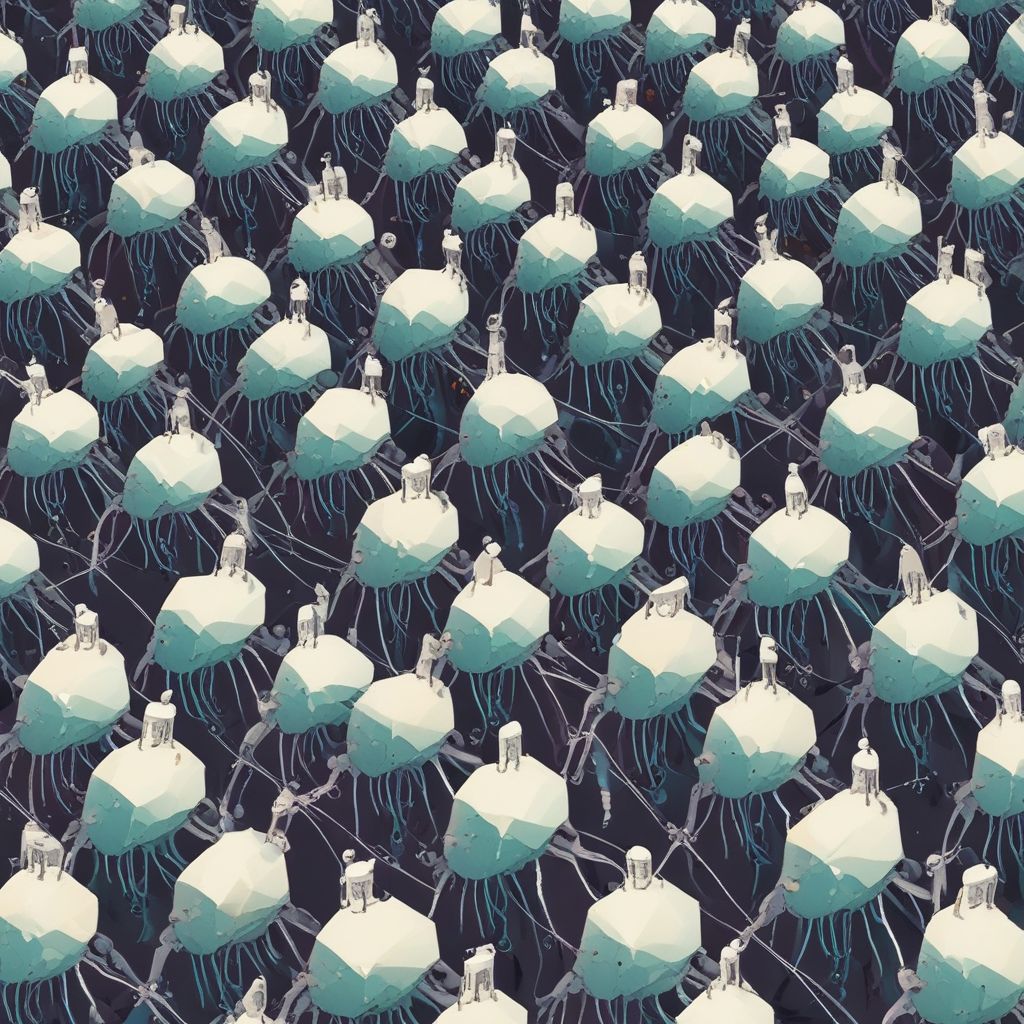

The rise of Micro-Collective AI marks a shift from single, monolithic large language models to distributed swarms of tiny models running on edge devices. The goal is privacy-preserving, resilient, and ultra-low-carbon inference that scales within real-world constraints. This article explains what Micro-Collective AI means, why organizations are adopting federated and collaborative micro-model networks, and how product teams can design for this future while avoiding common pitfalls.

What is Micro-Collective AI?

Micro-Collective AI describes an architecture in which many compact machine learning models—each specialized and lightweight—collaborate across a network of edge devices or local servers to perform tasks that would traditionally rely on a single large model. Instead of centralizing knowledge and compute in a gigantic LLM hosted in the cloud, intelligence emerges from coordinated micro-models that share updates, votes, and aggregated outputs through federated mechanisms.

Core components

- Tiny models: Parameter-efficient models trained for narrow tasks (e.g., intent classification, sensor fusion, language fragments).

- Edge orchestration: Local schedulers that decide which micro-models run and how their outputs are combined.

- Federated updates: Privacy-preserving aggregation of model updates without centralized raw-data transfer.

- Collective inference: Protocols for ensemble voting, model chaining, or compressed knowledge transfer.

Why swarms beat monoliths in many settings

Micro-Collective AI is not about making LLMs irrelevant; it’s about choosing the right tool for constraints like energy, latency, and privacy. Here are the leading advantages:

Privacy by design

- Data stays local: federated learning and on-device inference keep user data on the device, reducing exposure and regulatory burden.

- Reduced telemetry: only model deltas or summary statistics are shared, not raw personal data.

Resilience and robustness

- Fault tolerance: if some devices are offline, others in the swarm continue to operate, preserving degraded but usable capability.

- Localized adaptation: micro-models adapt to local contexts (regional language, sensor characteristics) without retraining a global LLM.

Ultra-low-carbon inference

- Edge inference can avoid cloud round-trips and the energy cost of running a massive model for every request.

- Model specialization reduces parameter count and energy per inference, enabling battery-friendly AI on phones, sensors, and microcontrollers.

How Micro-Collective AI architectures work

Architectures vary by use case, but common patterns include:

Ensemble voting

Multiple tiny models generate predictions and a lightweight aggregator or consensus protocol chooses the final result—effective for classification and filtering tasks.
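
As a minimal sketch of this pattern, the aggregator below takes a plain majority vote and abstains when agreement is too low. The `MicroModel` type, the `majority_vote` function, and the `min_agreement` threshold are illustrative assumptions, not part of any specific framework.

```python
from collections import Counter
from typing import Callable, Hashable, Optional, Sequence, Tuple

# Hypothetical stand-in for a tiny model: any callable mapping an input to a label.
MicroModel = Callable[[str], Hashable]


def majority_vote(
    models: Sequence[MicroModel], x: str, min_agreement: float = 0.5
) -> Tuple[Optional[Hashable], float]:
    """Return (label, agreement), or (None, agreement) when no label
    is backed by more than `min_agreement` of the models."""
    if not models:
        raise ValueError("need at least one model")
    votes = Counter(model(x) for model in models)
    label, count = votes.most_common(1)[0]
    agreement = count / len(models)
    if agreement <= min_agreement:
        return None, agreement  # abstain: no clear consensus
    return label, agreement
```

Returning `None` on weak agreement gives the caller a natural hook for a fallback path, such as deferring to a larger model.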

Model chaining

A pipeline of specialized micro-models processes inputs sequentially: e.g., a noise-robust audio detector → language fragment model → local domain intent model. This reduces the need for a general-purpose heavy model.
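
The chain above can be sketched as a list of stages with early exit, so that a cheap first stage gates the more expensive ones. The three stage functions here are toy stand-ins (hypothetical names and logic), not real detectors or language models.

```python
from typing import Any, Callable, List, Optional, Sequence

# A stage takes the previous stage's output and returns its own,
# or None to stop the chain early.
Stage = Callable[[Any], Optional[Any]]


def run_chain(stages: Sequence[Stage], x: Any) -> Optional[Any]:
    """Pass x through each stage in order; stop as soon as a stage returns None."""
    for stage in stages:
        x = stage(x)
        if x is None:
            return None
    return x


def detect_speech(frame: dict) -> Optional[str]:
    # Toy detector: drop frames whose energy is below a fixed threshold.
    return frame["text"] if frame["energy"] > 0.2 else None


def extract_fragment(text: str) -> Optional[List[str]]:
    # Toy language-fragment model: lowercase whitespace tokenization.
    tokens = text.lower().split()
    return tokens or None


def classify_intent(tokens: List[str]) -> str:
    # Toy intent model: keyword match for a single domain intent.
    return "lights_on" if "lights" in tokens and "on" in tokens else "unknown"


PIPELINE = [detect_speech, extract_fragment, classify_intent]
```

Calling `run_chain(PIPELINE, {"energy": 0.9, "text": "Turn the lights on"})` walks all three stages, while a low-energy frame is rejected by the first stage and the later models never run.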

Federated meta-learning

Devices train local models on private data and send parameter updates to a coordinator, which aggregates them into a meta-model. The updated parameters are then redistributed to the devices, enabling continual learning without raw data exchange.
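
The coordinator's aggregation step could look roughly like the sketch below, which applies a sample-weighted average of device updates in the style of federated averaging. This is a simplified illustration of the aggregation step only, not a full meta-learning procedure; the function name and the `(delta, num_examples)` update format are assumptions.

```python
from typing import List, Sequence, Tuple

# Assumed update format: (parameter delta, number of local training examples).
Update = Tuple[Sequence[float], int]


def federated_average(
    global_params: Sequence[float], updates: Sequence[Update]
) -> List[float]:
    """Add the sample-weighted mean of the device deltas to the global parameters."""
    total_examples = sum(n for _, n in updates)
    new_params = list(global_params)
    if total_examples <= 0:
        return new_params  # nothing to aggregate this round
    for delta, n in updates:
        if len(delta) != len(new_params):
            raise ValueError("update length does not match global parameters")
        weight = n / total_examples
        for i, d in enumerate(delta):
            new_params[i] += weight * d
    return new_params
```

Weighting by example count means a device that trained on more data moves the shared parameters further; only the deltas and counts leave the device, never the raw data.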

Key use cases

- Healthcare monitoring: Wearables run local anomaly detectors and share encrypted gradients for population-level model improvements while preserving patient privacy.

- Smart cities: Distributed sensors classify events locally, sending only alerts and model metrics to central systems to reduce bandwidth and preserve citizens’ privacy.

- Industrial IoT: Factory equipment runs specialized micro-models for predictive maintenance, enabling low-latency decisions without cloud round-trips.

- Assistive consumer devices: On-device language fragments and intent micro-models provide fast, offline-capable assistance for accessibility tools.

Challenges and how to mitigate them

Transitioning from monolithic LLMs to Micro-Collective AI introduces engineering and operational complexities; here are the main challenges with practical mitigations:

Model coordination and consistency

Challenge: Ensuring consistent outputs across heterogeneous devices and models.

Mitigation: Define clear aggregation protocols, versioned micro-model manifests, and periodic validation tests to align behavior.
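
One way to picture a versioned manifest is as a list of expected model entries that each device compares against what it has installed. The `ManifestEntry` fields and the `find_stale_models` helper below are hypothetical; a real manifest schema would depend on the deployment tooling.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class ManifestEntry:
    name: str      # logical model name, e.g. "intent"
    version: str   # version string pinned by the manifest
    sha256: str    # hex digest of the model file


def find_stale_models(
    manifest: List[ManifestEntry], installed: Dict[str, ManifestEntry]
) -> List[str]:
    """Return names of models that are missing locally or differ from the manifest."""
    stale = []
    for entry in manifest:
        local = installed.get(entry.name)
        if local is None or local != entry:
            stale.append(entry.name)
    return stale
```

A device could run this check at startup and request updates for anything in the returned list before joining collective inference.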

Security and trust

Challenge: Federated updates can be poisoned or manipulated.

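Mitigation: Screen incoming updates with anomaly detection or robust aggregation, and authenticate contributors with cryptographic signatures and provenance tracking. Secure aggregation and differential privacy protect the confidentiality of updates, but they do not stop poisoning on their own, so combine them with these integrity checks.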
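
As one illustration of limiting the influence of a poisoned update, the sketch below clips each update to a maximum L2 norm and then takes a coordinate-wise median. This is one simple robust-aggregation approach among several, offered as an assumption rather than a recommendation from the original text; the function names and `max_norm` parameter are hypothetical.

```python
import math
from statistics import median
from typing import List, Sequence


def clip_update(delta: Sequence[float], max_norm: float) -> List[float]:
    """Scale delta down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(d * d for d in delta))
    if norm == 0.0 or norm <= max_norm:
        return list(delta)
    scale = max_norm / norm
    return [d * scale for d in delta]


def robust_aggregate(
    deltas: Sequence[Sequence[float]], max_norm: float
) -> List[float]:
    """Clip every update, then take the median of each coordinate."""
    if not deltas:
        raise ValueError("need at least one update")
    clipped = [clip_update(d, max_norm) for d in deltas]
    # zip(*clipped) groups the i-th coordinate of every update together.
    return [median(column) for column in zip(*clipped)]
```

With a median, a single extreme update cannot drag the result arbitrarily far, which a plain mean would allow. Note that this check needs to see individual updates, so it has to be reconciled with any secure-aggregation scheme that hides them.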

Operational overhead

Challenge: Managing many small models can be more complex than a single deployment pipeline.

Mitigation: Invest in automation: model registries, OTA update channels, device telemetry dashboards, and resource-aware schedulers.
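
To make "resource-aware scheduler" concrete, here is a small greedy sketch that picks which micro-models to load under a memory budget, highest priority first. The `ModelSpec` fields, the priority scheme, and the greedy policy are all illustrative assumptions; production schedulers typically weigh more factors, such as latency and battery state.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ModelSpec:
    name: str
    memory_kb: int   # estimated memory needed to load the model
    priority: int    # higher values are scheduled first


def schedule(models: List[ModelSpec], memory_budget_kb: int) -> List[str]:
    """Greedily select models by priority until the memory budget is used up."""
    chosen: List[str] = []
    remaining = memory_budget_kb
    # Sort by descending priority; break ties in favor of smaller models.
    for spec in sorted(models, key=lambda m: (-m.priority, m.memory_kb)):
        if spec.memory_kb <= remaining:
            chosen.append(spec.name)
            remaining -= spec.memory_kb
    return chosen
```

Greedy selection is not optimal in general, but it is cheap enough to run on the device itself each time the available memory changes.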

Design checklist for product and engineering teams

When designing a Micro-Collective AI system, follow this practical checklist:

- Choose tasks suited to micro-models: start with clear, narrow tasks that fit tiny models.

- Design federated training and secure aggregation from the outset to meet privacy goals.

- Implement fallbacks to cloud inference for low-frequency, high-complexity queries to balance capability and cost.

- Measure energy per inference and optimize for device battery constraints.

- Build monitoring for distribution drift and local model health to trigger updates automatically.
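
The cloud-fallback item in the checklist can be sketched as a confidence gate: answer on the device when the local model is confident, and escalate otherwise. The function name, the `(label, confidence)` return shape for the local model, and the `0.8` default threshold are assumptions for illustration.

```python
from typing import Callable, Tuple

# Assumed interfaces: the local model returns (label, confidence in [0, 1]);
# the cloud model returns a label only.
LocalModel = Callable[[str], Tuple[str, float]]
CloudModel = Callable[[str], str]


def answer_with_fallback(
    query: str,
    local_model: LocalModel,
    cloud_model: CloudModel,
    min_confidence: float = 0.8,
) -> Tuple[str, str]:
    """Return (label, source), where source is "edge" or "cloud"."""
    label, confidence = local_model(query)
    if confidence >= min_confidence:
        return label, "edge"
    # Low confidence: escalate this query to the larger cloud model.
    return cloud_model(query), "cloud"
```

Logging the returned source per request also gives a direct measure of how often the edge path handles traffic, which feeds the energy and cost tracking listed above.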

Looking ahead: hybrid ecosystems

Rather than a binary replacement, Micro-Collective AI and large models will coexist in hybrid ecosystems: tiny models handle routine, latency-sensitive, or private tasks at the edge while monolithic LLMs provide deep reasoning, summarization, or large-context tasks when privacy and carbon budgets permit. The future is about orchestrating across layers of intelligence to deliver the best user outcomes with minimal environmental and privacy cost.

Micro-Collective AI reframes intelligence as a collective property of many small, efficient units—an approach engineered for the constraints and values of the next decade.

Conclusion: Swarms of tiny models coordinated through federated and collaborative protocols offer a compelling alternative to monolithic LLMs when privacy, resilience, and ultra-low-carbon inference are priorities; adopting Micro-Collective AI requires careful orchestration but delivers durable, scalable value at the edge.
