The Beta-Feature Trap arrives quietly: teams eager for momentum let early users shape the roadmap until the original product vision is lost. This article examines the trap through real-world dynamics, practical guardrails, and a repeatable framework that helps lean startups collect feedback without surrendering direction.

What is the Beta-Feature Trap?

The Beta-Feature Trap happens when early adopters — often vocal, technical, and highly engaged — push a product toward niche feature requests. Because early feedback feels urgent and the startup craves retention and evangelism, those requests are enacted rapidly and without strategic filtering. Over time the product drifts away from its intended audience or core value proposition, increasing complexity and reducing product-market fit for the majority of users.

Why it’s especially dangerous for lean startups

- High sensitivity: Small teams react quickly; every request can become a shipped feature.

- Survivorship bias: Early adopters who stay are often unrepresentative of the broader market.

- Resource sink: Custom features add maintenance costs and technical debt.

- Vision erosion: Product decisions made to please a few accumulate into a different product entirely.

Signs your product is slipping into the trap

- Roadmap dominated by “customer asks” rather than hypotheses or outcomes.

- Feature bloat: a proliferation of one-off toggles and edge-case options.

- Support load grows faster than the active user base.

- Conflicting metrics: engagement rises on niche features while overall retention declines.

- Internal language shifts from “we’re building X” to “we’re building what Y wants.”

Core principle: Separate feedback collection from decision authority

Feedback is gold; unfiltered execution is a liability. The startup’s mission and product vision must act as the decision-making north star. Feedback informs experiments and hypothesis testing, but does not automatically create roadmap items.

Three simple rules to balance feedback and vision

- Filter: Every incoming request passes through product principles and a problem-statement lens. Ask: what problem does this solve, for whom, and how does it advance the core metric?

- Quantify: Convert requests into measurable hypotheses before building. Estimate affected user volume, lift to retention or revenue, and engineering cost.

- Experiment: Prefer lightweight experiments (feature flags, prototypes, A/B tests) rather than one-off production features.
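
The Quantify rule can be made concrete with a small scoring sketch. The class name, field names, sample numbers, and the scoring formula (estimated users retained per engineering week) are all illustrative assumptions, not a standard method; any ratio of expected impact to cost would serve the same purpose.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FeatureHypothesis:
    """A feature request restated as a measurable bet. Every field is an estimate."""
    problem: str              # what problem does this solve?
    persona: str              # for whom?
    affected_users: int       # estimated users who hit the problem
    expected_lift: float      # estimated retention lift, e.g. 0.05 = 5 points
    engineering_weeks: float  # estimated cost to build

    def retained_users_per_week(self) -> float:
        """Estimated extra retained users per engineering week (hypothetical score)."""
        if self.engineering_weeks <= 0:
            raise ValueError("engineering_weeks must be positive")
        return self.affected_users * self.expected_lift / self.engineering_weeks


# Made-up requests for illustration only.
requests = [
    FeatureHypothesis("Wants custom widget API", "power user", 15, 0.20, 6.0),
    FeatureHypothesis("Cannot export notes", "team lead", 400, 0.05, 2.0),
]

# Highest estimated impact per unit of cost first.
ranked = sorted(requests, key=lambda h: h.retained_users_per_week(), reverse=True)
for h in ranked:
    print(f"{h.problem}: {h.retained_users_per_week():.1f}")
```

With these sample numbers the broad export problem scores about 10 and the niche widget request about 0.5, even though the power user's request promises a larger lift per affected user. The point is not the exact formula but that the loudest request has to compete on the same scale as everything else.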

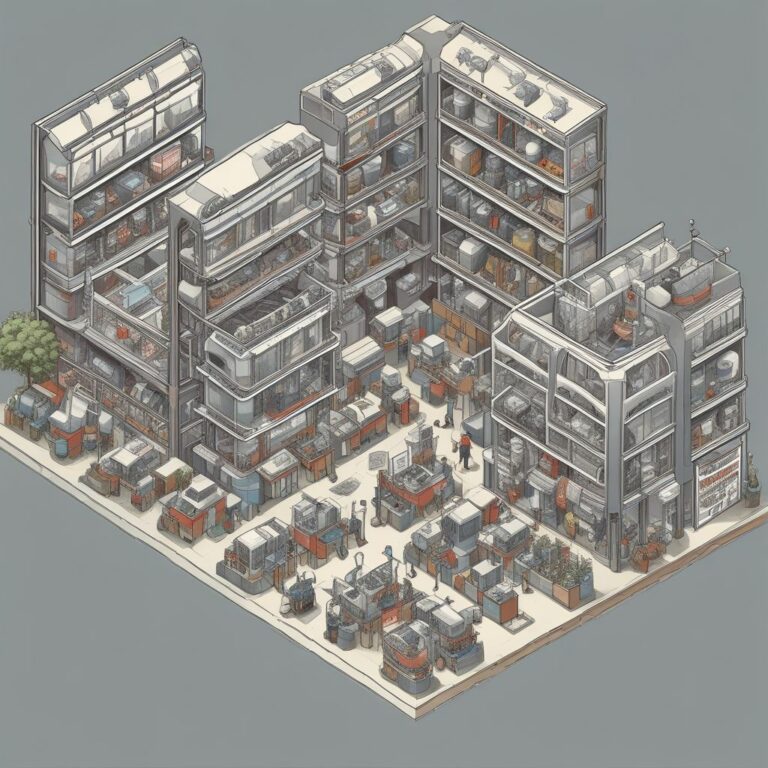

A practical framework: The Feedback Funnel

Turn chaotic suggestions into prioritized, testable work using the Feedback Funnel:

- Capture: Centralize feedback (support, sales, community). Tag by user persona and urgency.

- Classify: Label each ask as a bug, UX friction, an enhancement, or a new capability targeting a new persona.

- Validate: Look for corroborating signals — analytics, NPS, growth metrics, or repeat requests from multiple personas.

- Hypothesize: Define the desired outcome (e.g., reduce churn by X% for segment Y). Write a one-line hypothesis and metric.

- Experiment: Run the smallest test that could invalidate the hypothesis (click-through changes, mockups, toggled rollouts).

- Decide: If the experiment meets the success criteria, promote to roadmap; if not, document and close.
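
As a rough sketch, the Capture, Classify, Validate, and Decide steps might map onto a data model like the one below. The type names, the `theme` field, and the threshold of two distinct personas are assumptions made for illustration; a real team would pick its own taxonomy and corroboration rule, and would also weigh analytics and NPS signals that this sketch leaves out.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum


class Kind(Enum):
    """Classify step: the four categories named in the funnel."""
    BUG = "bug"
    UX_FRICTION = "ux_friction"
    ENHANCEMENT = "enhancement"
    NEW_CAPABILITY = "new_capability"


@dataclass(frozen=True)
class Feedback:
    """Capture step: one centralized, tagged piece of feedback."""
    theme: str    # normalized topic, e.g. "export" (hypothetical tag)
    persona: str  # who asked
    source: str   # support, sales, or community
    kind: Kind


def validated_themes(items, min_personas=2):
    """Validate step: keep themes raised by at least `min_personas` distinct personas."""
    personas_by_theme = defaultdict(set)
    for item in items:
        personas_by_theme[item.theme].add(item.persona)
    return sorted(
        theme for theme, personas in personas_by_theme.items()
        if len(personas) >= min_personas
    )


def decide(observed: float, target: float) -> str:
    """Decide step: promote only if the experiment met its success criterion."""
    return "promote to roadmap" if observed >= target else "document and close"


inbox = [
    Feedback("export", "team lead", "support", Kind.ENHANCEMENT),
    Feedback("export", "new user", "sales", Kind.UX_FRICTION),
    Feedback("widget-api", "power user", "community", Kind.NEW_CAPABILITY),
    Feedback("widget-api", "power user", "community", Kind.NEW_CAPABILITY),
]
print(validated_themes(inbox))
```

Here the repeated "widget-api" request comes from a single persona, so it does not advance on volume alone, while "export" is corroborated by two personas and moves on to the Hypothesize step.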

Tools and practices that make the funnel work

- Feature flags and staged rollouts — ship but control exposure.

- Telemetry linked to feature releases — know who uses what and whether it moves metrics.

- Feedback taxonomy in your CRM or issue tracker — searchability beats memory.

- Quarterly vision reviews — map accumulated asks back to long-term goals.
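
For teams without a feature-flag service yet, a staged rollout can be approximated with deterministic hashing. This is a minimal sketch under stated assumptions: the function name, the flag key, and the user IDs are hypothetical, and a production system would typically use a dedicated flagging tool.

```python
import hashlib


def in_rollout(flag: str, user_id: str, percent: int) -> bool:
    """Deterministically place a user inside or outside a staged rollout.

    The same (flag, user_id) pair always maps to the same bucket, so a user
    does not flip in and out of the feature between sessions.
    """
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    digest = hashlib.sha256(f"{flag}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # stable bucket in 0..99
    return bucket < percent


# Expose a hypothetical "custom-widgets" experiment to roughly 10% of users.
exposed = [uid for uid in (f"user-{n}" for n in range(1000))
           if in_rollout("custom-widgets", uid, 10)]
print(len(exposed))
```

Because a user's bucket is fixed, raising the percentage only adds users and never removes anyone already exposed. Logging the flag name alongside usage events is what links this exposure control back to telemetry.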

Decision filters and guardrails

Introduce concise guardrails to prevent mission creep:

- Three-filter rule: A request needs to pass two product principle filters and one metric/go-to-market filter to be considered for development.

- One-in, one-out policy: For each new cross-cutting feature added to core flows, retire or consolidate an existing feature to manage complexity.

- Adopter quotas: Limit the proportion of roadmap work that originates from a single customer segment (e.g., early adopters) to a set percentage per quarter.
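
Two of these guardrails are simple enough to check mechanically. The sketch below reads the three-filter rule as "at least two principle filters and at least one metric/go-to-market filter", and uses a 30% quota; both the reading and the number are assumptions, as are the function and field names.

```python
from collections import Counter


def passes_three_filter_rule(principle_checks, market_checks) -> bool:
    """True if at least two product-principle filters and at least one
    metric/go-to-market filter pass (assumed reading of the rule)."""
    return sum(map(bool, principle_checks)) >= 2 and sum(map(bool, market_checks)) >= 1


def segments_over_quota(roadmap_items, max_share=0.30):
    """Return each segment whose share of roadmap items exceeds `max_share`."""
    counts = Counter(item["segment"] for item in roadmap_items)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {seg: n / total for seg, n in counts.items() if n / total > max_share}


# Hypothetical quarter: 5 items from early adopters, 3 mainstream, 2 enterprise.
quarter = (
    [{"segment": "early_adopter"}] * 5
    + [{"segment": "mainstream"}] * 3
    + [{"segment": "enterprise"}] * 2
)
print(segments_over_quota(quarter))
```

In this made-up quarter, early adopters account for half of the roadmap and are flagged, while the mainstream segment sits exactly at the 30% line and is not.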

How to engage early adopters without losing control

Early adopters are invaluable allies when treated as a testing cohort, not co-owners of the roadmap. Tactics:

- Create a “Beta Club” agreement: explicit expectations about experimental scope, support level, and technical debt.

- Offer configurable solutions rather than full product changes: plugins, webhooks, or power-user modes kept separate from the core UX.

- Charge for customization, and offer it sparingly: paying customers get prioritization, and a price tag discourages endless free tweaks.

Case study sketch: how a tiny drift nearly killed a product

Imagine “NoteFlow,” built as a simple collaborative notes app for teams. Vocal power users demanded deep formatting customization, embeddable specialized widgets, and a complex permissions matrix. Each request shipped quickly to keep them happy. Six quarters later, NoteFlow had a massive settings panel, rising bug counts, and a mainstream audience that had churned because the app felt overwhelming. Recovery required a product rewrite and a renewed focus on core simplicity, which was both expensive and damaging to its reputation. The rescue started with a ruthless prioritization exercise and a public roadmap reset that clarified boundaries.

Quick checklist for product teams (start today)

- Document the top three user problems the product exists to solve.

- Centralize feedback and tag by persona within one tool.

- Create a hypothesis template and require it for feature proposals.

- Install feature flags and basic telemetry before the next big ship.

- Run a quarterly roadmap purge: remove low-impact cruft and consolidate similar features.

Measuring success: metrics that matter

Swap vanity signals for leading indicators: retention cohort curves, time-to-value, feature adoption curves for core flows, and cost-to-serve per active user. Track the percentage of roadmap items that are hypothesis-driven versus reactive requests, and aim to increase the hypothesis share each quarter.
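
The hypothesis-share metric is straightforward to track once roadmap items carry an origin tag. A minimal sketch, assuming each item is a dict with an `origin` field set to either `"hypothesis"` or `"reactive"` (a hypothetical schema):

```python
def hypothesis_share(roadmap_items) -> float:
    """Fraction of roadmap items that originated from a written hypothesis."""
    if not roadmap_items:
        return 0.0
    driven = sum(1 for item in roadmap_items if item["origin"] == "hypothesis")
    return driven / len(roadmap_items)


def share_is_rising(shares_by_quarter) -> bool:
    """True if every quarter's share is strictly higher than the one before."""
    return all(later > earlier
               for earlier, later in zip(shares_by_quarter, shares_by_quarter[1:]))


# Made-up data: one of four items hypothesis-driven in Q1, two of four in Q2.
q1 = [{"origin": "hypothesis"}] + [{"origin": "reactive"}] * 3
q2 = [{"origin": "hypothesis"}] * 2 + [{"origin": "reactive"}] * 2

shares = [hypothesis_share(q1), hypothesis_share(q2)]
print(shares, share_is_rising(shares))
```

With this sample data the share moves from 0.25 to 0.5, so the quarter-over-quarter goal is met.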

Avoiding the Beta-Feature Trap doesn’t mean ignoring users — it means treating their requests as experiments, not mandates. With a clear vision, simple decision rules, and lightweight experiments, startups can harness early-adopter energy without being redirected by it.

Conclusion: The Beta-Feature Trap is avoidable. Protect your product vision, systematize feedback into tests, and let data, not urgency, decide what becomes part of your core. Lead with principles, ship with control, and iterate with evidence.

Ready to reclaim your roadmap? Start by running a 30-day Feedback Funnel audit and reduce feature drift this quarter.