Event-Sourced Indexes unlock relational query capabilities on document and key/value databases by streaming every mutation into a CDC-driven pipeline that builds materialized views — the core idea behind Event-Sourced Indexes is to let change streams power real-time, SQL-like queries without compromising primary write throughput.

Why use an event-sourced index layer?

NoSQL databases excel at high-throughput, low-latency writes, but they lack rich relational query semantics and analytical indices. Instead of forcing relational features into the write path (which harms throughput), an event-sourced approach captures changes as an append-only log and asynchronously projects them into read-optimized materialized views.

Benefits at a glance

- Separation of concerns: writes remain fast and simple; reads are served from optimized views.

- Real-time analytics: change streams allow near-real-time updates to indexes and aggregates.

- Reproducibility and auditability: append-only events make it easy to replay or rebuild views.

- Scalability: stream processors can parallelize view maintenance independently of the primary store.

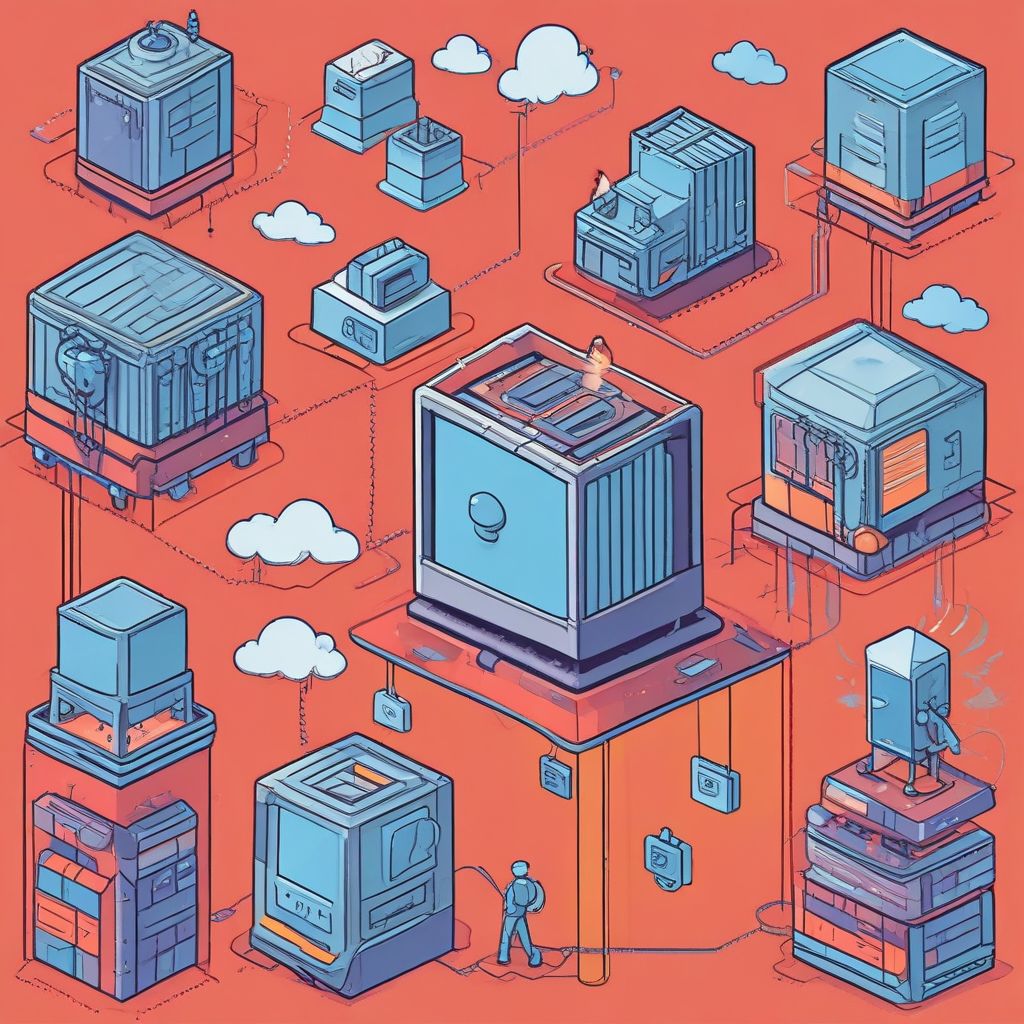

High-level architecture

A robust CDC-driven materialized-view layer typically has four components:

- Change Capture — native change streams (e.g., MongoDB, DynamoDB Streams) or WAL tailing via connectors such as Debezium.

- Event Bus — a durable log (Kafka, Pulsar) that buffers and partitions events for downstream consumers.

- Stream Processor — stateless/stateful processors (Kafka Streams, Flink, ksqlDB) that compute projections, joins and aggregates.

- Materialized Read Store — read-optimized indices or stores (Elasticsearch, columnar files, relational cache) serving SQL-like queries.

Designing change events

Design minimal, stable event payloads to protect write performance and downstream flexibility:

- Emit concise events: primary key, operation type (insert/update/delete), relevant fields and timestamps.

- Prefer logical events over raw snapshots when possible to reduce event size and processing cost.

- Include schema version and correlation IDs to support schema evolution and debugging.

Projection and indexing strategies

Materialized views can implement a range of relational features — secondary indexes, join tables, time-windowed aggregations — using different projection patterns:

- Upsert projections: process events into target indices using idempotent upsert semantics so replays are safe.

- Incremental aggregations: maintain counts/sums using delta processing rather than full recomputation.

- Join projections: denormalize relationships into a read model to serve fast SQL-like joins.

- Search indexes: transform documents into flattened search records for full-text or multi-field lookup.

Key implementation details and best practices

1. Preserve write throughput

- Keep the capture mechanism asynchronous and append-only; never block the primary write path on view updates.

- Emit lightweight events and offload heavy computation to the stream processor.

- Partition events by hot key to parallelize processing while avoiding single-threaded bottlenecks.

2. Idempotency and ordering

Exactly-once semantics are ideal but expensive; aim for idempotent projections and deterministic ordering per key:

- Use event sequence numbers or timestamps to apply updates in order.

- Design materializer writes as idempotent upserts keyed by entity id + event version.

3. Schema evolution and versioning

- Attach schemaVersion to events and use a schema registry to decode old events safely.

- Support gradual migration by allowing processors to read multiple schema versions and emit a unified projection.

4. Backfill and replay

Rebuilds are a feature, not a bug. Keep event retention or snapshots to allow efficient backfills:

- Support snapshotting of source data to accelerate full rebuilds when event history is truncated.

- Implement checkpointing so processors can resume from the last committed offset after failure.

5. Observability and monitoring

Track lag, processing rate, error rates and cardinality growth. Instrument end-to-end latency from source commit to read-store visibility and alert when lag crosses thresholds.

Querying: SQL-like capabilities on the read side

With event-sourced materialized views, the read store can present powerful SQL-like interfaces:

- Expose denormalized tables for joins and foreign-key-like queries.

- Maintain rolling aggregates and time-series tables for analytics dashboards.

- Provide read-time SQL translation layers that map queries to view tables or fallback to the primary store when necessary.

Failure modes and recovery

Common failure scenarios include processor crashes, network partitions, and poisoned messages. Mitigations:

- Checkpoint frequently and make processors idempotent so retries are safe.

- Isolate and quarantine malformed events for manual inspection rather than blocking the pipeline.

- Design for replayability: persistent offset tracking plus event compacting where needed.

Operational tips for scale

- Partition work by natural keys and scale consumers horizontally to maintain throughput.

- Batch writes to the read store to reduce IOPS and amortize overhead while keeping latency constraints in mind.

- Use tiered storage for large aggregates (hot read store for recent data, cold object storage for older snapshots).

Real-world toolchain

A practical stack might combine native change streams or Debezium for capture, Kafka as the durable event bus, Kafka Streams/Flink for projection logic, and Elasticsearch or a columnar store for materialized views. This combination gives fault tolerance, partitioned parallelism and flexible projection semantics while leaving the primary NoSQL store optimized for writes.

Event-Sourced Indexes are a pragmatic pattern: they provide relational and analytical power on top of schemaless datastores while preserving the essential performance characteristics of the primary write path.

Conclusion: By decoupling capture, processing and serving via CDC-driven materialized views, systems gain SQL-like query capabilities and real-time analytics on NoSQL platforms without compromising write throughput. Start by designing compact events, enforcing idempotent projections, and building robust observability into the pipeline.

Ready to transform your NoSQL store into a real-time, SQL-capable analytics platform? Explore a small pilot using native change streams + a stream processor this week.