The rise of introspective robots in factories and warehouses demands transparency — and that’s where Explainable Action Logs become mission-critical. Explainable Action Logs are structured, human-readable records that pair every pick, path, and pause with a clear rationale, enabling faster maintenance, cleaner audits, and smoother human–robot collaboration. This article explores architectures, design patterns, and practical guidelines for implementing these logs so that automation is not just fast, but also understandable and trustworthy.

Why explainability matters for industrial robots

Traditional robotic logs record low-level telemetry and error codes; they are optimized for machines, not people. When a part is mispicked or an AGV pauses unexpectedly, technicians and auditors need more than a stack trace — they need the “why.” Explainable Action Logs translate sensor streams, planner decisions, and confidence scores into narratives that humans can read and act upon. The benefits are tangible:

- Faster maintenance: Technicians get clear causes and suggested fixes instead of guessing from cryptic codes.

- Regulatory compliance and audits: Explainable records make it easier to demonstrate safety procedures and decision chains.

- Human–robot collaboration: Operators can understand robot intent and intervene at the right moment.

- Continuous improvement: Teams can mine rationales to improve planners, perception models, and policies.

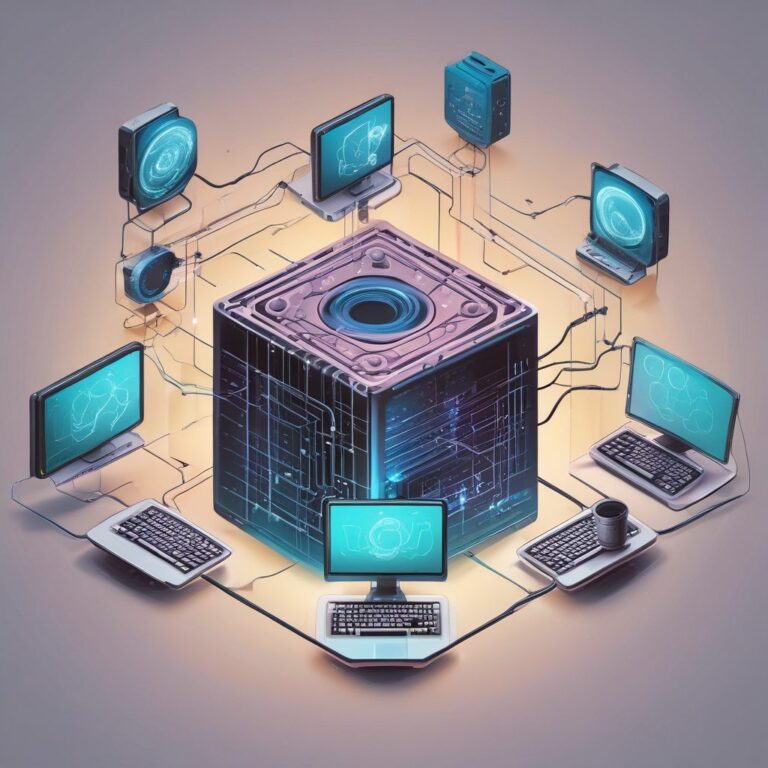

Core components of an Explainable Action Log architecture

Implementing explainable logs requires a few modular components working together. Treat the logging subsystem as a first-class service, not an afterthought.

1. Action emitter (low-level)

This component emits events for every actuator command and state change: gripper open/close, arm trajectory start/stop, velocity and pose updates, and pauses. Each event includes precise timestamps, contextual IDs (task ID, operator ID), and sensor snapshots or links to them.
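As a rough illustration of what the emitter produces, the sketch below models one action event as a Python dataclass and serializes it as a JSON line. The class name `ActionEvent`, the field names `event_type` and `sensor_refs`, the `emit` helper, and the sample snapshot path are assumptions made for this example; they do not come from any particular robot SDK.

```python
import json
import uuid
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Dict, Optional


@dataclass
class ActionEvent:
    """One actuator command or state change (hypothetical schema)."""
    robot_id: str
    task_id: str
    event_type: str                       # e.g. "gripper_close", "pause"
    operator_id: Optional[str] = None     # set when a human is involved
    # Links to sensor snapshots rather than the raw blobs themselves.
    sensor_refs: Dict[str, str] = field(default_factory=dict)
    event_id: str = field(default_factory=lambda: uuid.uuid4().hex)
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def emit(event: ActionEvent) -> str:
    """Serialize an event as one JSON line; a real emitter would publish it."""
    return json.dumps(asdict(event), sort_keys=True)


line = emit(ActionEvent(
    robot_id="arm-03",
    task_id="pick-f0123",
    event_type="gripper_close",
    sensor_refs={"camera": "cam2/064512.jpg"},   # illustrative path
))
print(line)
```

Generating the event ID and UTC timestamp at construction time keeps every event uniquely addressable, which the later layers can rely on when attaching rationales.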

2. Rationale generator (middle layer)

A lightweight inference engine attaches a human-readable explanation to events. It can use rule-based templates, model-derived explanations (e.g., “vision confidence 0.42 → replan”), or a hybrid causal model that links perception, constraints, and objectives. Key fields produced include intent, cause, confidence, alternative actions considered, and corrective suggestions.
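The rule-based option can be sketched in a few lines of Python. This is a minimal example, not a full inference engine: the `Rationale` class, the `explain_pick` function, and the replan threshold of 0.5 are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import List

# Assumed threshold below which the planner replans a grasp.
REPLAN_THRESHOLD = 0.5


@dataclass
class Rationale:
    intent: str
    cause: str
    confidence: float
    alternatives: List[str]
    suggestion: str
    summary: str


def explain_pick(vision_confidence: float, camera: str,
                 alternatives: List[str]) -> Rationale:
    """Attach a templated explanation to a pick decision."""
    if vision_confidence < REPLAN_THRESHOLD:
        return Rationale(
            intent="pick part",
            cause=(f"vision confidence {vision_confidence:.2f} "
                   f"below threshold {REPLAN_THRESHOLD:.2f}"),
            confidence=vision_confidence,
            alternatives=alternatives,
            suggestion=f"Inspect {camera} for dirt or misalignment.",
            summary=f"vision confidence {vision_confidence:.2f} -> replan",
        )
    return Rationale(
        intent="pick part",
        cause="perception within limits",
        confidence=vision_confidence,
        alternatives=alternatives,
        suggestion="No action needed.",
        summary=f"vision confidence {vision_confidence:.2f} -> proceed",
    )


r = explain_pick(0.42, "camera_2", ["edge_grasp"])
print(r.summary)   # vision confidence 0.42 -> replan
```

Templates like these are cheap enough to run on the controller itself; model-derived or causal explanations could fill the same fields later without changing the record shape.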

3. Structured logger and storage

Store logs as structured entries (JSON-LD or a schema-compatible format) so they can be indexed and searched. Each entry should be queryable by time range, robot ID, task ID, or rationale type. For audit integrity, write entries to an append-only store and sign them cryptographically so that tampering is detectable.
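One way to make an append-only log tamper-evident is to chain each record to the previous one. The sketch below is an in-memory illustration under stated assumptions: it uses HMAC-SHA256 from the Python standard library, which is a keyed message authentication code rather than a public-key signature, and the `SignedLog` class, the demo key, and the sample IDs `agv-07` and `move-0042` are invented for the example.

```python
import hashlib
import hmac
import json
from typing import Dict, List

GENESIS = "0" * 64   # placeholder "previous MAC" for the first record


class SignedLog:
    """In-memory append-only log; each record's MAC covers the previous MAC."""

    def __init__(self, key: bytes) -> None:
        self._key = key
        self._records: List[Dict] = []

    def _mac(self, prev: str, entry: Dict) -> str:
        payload = prev + json.dumps(entry, sort_keys=True)
        return hmac.new(self._key, payload.encode("utf-8"),
                        hashlib.sha256).hexdigest()

    def append(self, entry: Dict) -> None:
        prev = self._records[-1]["mac"] if self._records else GENESIS
        self._records.append(
            {"entry": entry, "prev": prev, "mac": self._mac(prev, entry)}
        )

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered record breaks it."""
        prev = GENESIS
        for rec in self._records:
            expected = self._mac(prev, rec["entry"])
            if rec["prev"] != prev or not hmac.compare_digest(rec["mac"], expected):
                return False
            prev = rec["mac"]
        return True

    def query(self, **filters) -> List[Dict]:
        """Return entries whose fields equal all given filter values."""
        return [r["entry"] for r in self._records
                if all(r["entry"].get(k) == v for k, v in filters.items())]


log = SignedLog(key=b"demo-key-not-for-production")
log.append({"robot_id": "arm-03", "task_id": "pick-f0123", "event": "pick_attempt"})
log.append({"robot_id": "agv-07", "task_id": "move-0042", "event": "pause"})
print(log.verify())                                  # True
log._records[0]["entry"]["event"] = "pick_success"   # simulate tampering
print(log.verify())                                  # False
```

A production system would persist records durably and would likely use asymmetric signatures so that auditors can verify entries without holding the signing key.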

4. Human-friendly interfaces

Expose summaries, timelines, and drill-down views in a web dashboard or in-plant tablet. Make logs readable by maintenance crews with quick filters: “show low-confidence picks” or “pauses longer than 2s with no operator override.”
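The two quick filters quoted above can be expressed as plain predicates over log entries. This is a sketch under assumptions: the field names `duration_s` and `operator_override`, the 0.5 confidence threshold, and the sample entries are hypothetical.

```python
from typing import Dict, Iterable, List


def low_confidence_picks(entries: Iterable[Dict],
                         threshold: float = 0.5) -> List[Dict]:
    """Filter: 'show low-confidence picks'."""
    return [
        e for e in entries
        if e["event"] == "pick_attempt"
        and e["rationale"]["confidence"] < threshold
    ]


def unattended_pauses(entries: Iterable[Dict],
                      min_seconds: float = 2.0) -> List[Dict]:
    """Filter: 'pauses longer than 2s with no operator override'."""
    return [
        e for e in entries
        if e["event"] == "pause"
        and e.get("duration_s", 0.0) > min_seconds
        and not e.get("operator_override", False)
    ]


entries = [
    {"task_id": "t1", "event": "pick_attempt", "rationale": {"confidence": 0.38}},
    {"task_id": "t2", "event": "pick_attempt", "rationale": {"confidence": 0.91}},
    {"task_id": "t3", "event": "pause", "duration_s": 3.5, "operator_override": False},
    {"task_id": "t4", "event": "pause", "duration_s": 4.0, "operator_override": True},
    {"task_id": "t5", "event": "pause", "duration_s": 1.0},
]

print([e["task_id"] for e in low_confidence_picks(entries)])   # ['t1']
print([e["task_id"] for e in unattended_pauses(entries)])      # ['t3']
```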

Design principles for clear, useful rationales

- Conciseness and relevance: Prefer short, actionable statements over verbose explanations. Include the most relevant cause and one corrective suggestion.

- Structured and layered: Combine a one-line summary with optional technical details for engineers (e.g., sensor frames, stack traces, model inputs).

- Confidence and alternatives: Always include confidence scores and, when appropriate, the top alternative actions considered by the planner.

- Context-aware phrasing: Tailor language by audience — operators receive plain-language suggestions, auditors get compliance references, and developers get raw telemetry links.

- Tamper-evidence: Sign and checkpoint logs so that auditors can verify entries have not been altered.
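The layered and context-aware principles can be combined in a single rendering function that shows each audience a different view of the same entry. The sketch below is illustrative only: the `render` function, the `compliance_ref` field, and its value `SOP-PICK-7` are hypothetical.

```python
from typing import Dict

entry = {
    "timestamp": "2026-01-14T06:45:12Z",
    "robot_id": "arm-03",
    "event": "pick_attempt",
    "compliance_ref": "SOP-PICK-7",   # hypothetical procedure reference
    "rationale": {
        "summary": "Chose edge grasp because vision confidence was low (0.38).",
        "cause": "Occlusion detected by camera_2.",
        "confidence": 0.38,
        "suggested_action": "Inspect camera_2 for dirt or misalignment.",
    },
    "links": {"trajectory": "s3://factory-logs/2026/01/14/arm-03/traj/064512.json"},
}


def render(entry: Dict, audience: str) -> str:
    """Return one line of text tailored to the reader's role."""
    r = entry["rationale"]
    if audience == "operator":
        # Plain language plus a single corrective suggestion.
        return f"{r['summary']} Next step: {r['suggested_action']}"
    if audience == "auditor":
        # Who did what, when, why, and under which procedure.
        return (f"{entry['timestamp']} {entry['robot_id']} {entry['event']}: "
                f"{r['cause']} Reference: {entry.get('compliance_ref', 'n/a')}")
    if audience == "developer":
        # Summary plus confidence and links to raw telemetry.
        links = ", ".join(f"{k}={v}" for k, v in sorted(entry.get("links", {}).items()))
        return f"{r['summary']} confidence={r['confidence']:.2f} {links}"
    raise ValueError(f"unknown audience: {audience}")


for role in ("operator", "auditor", "developer"):
    print(render(entry, role))
```

Keeping one stored record and rendering it per audience avoids maintaining three separate logs that could drift apart.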

Example log entry (conceptual)

    {
      "timestamp": "2026-01-14T06:45:12Z",
      "robot_id": "arm-03",
      "task_id": "pick-f0123",
      "event": "pick_attempt",
      "rationale": {
        "summary": "Skipped planned grasp due to low vision confidence (0.38); chose alternative edge grasp.",
        "cause": "Occlusion detected by camera_2; depth variance increased beyond threshold.",
        "confidence": 0.38,
        "alternatives_considered": ["planned_center_grasp (conf=0.42)", "edge_grasp (conf=0.38)"],
        "suggested_action": "Inspect camera_2 for dirt or misalignment; consider reorienting part."
      },
      "links": {
        "camera_snapshot": "s3://factory-logs/2026/01/14/arm-03/cam2/064512.jpg",
        "trajectory": "s3://factory-logs/2026/01/14/arm-03/traj/064512.json"
      }
    }

This entry pairs machine-precise data with a human summary and an explicit next step for maintenance or operators.

Practical considerations and trade-offs

While explainable logs offer clear benefits, architects must balance cost, latency, and privacy:

- Compute and latency: Generating deep explanations can be compute-heavy. Use a two-tier approach: short, local rationales for immediate use and richer cloud-generated reports for after-action review.

- Storage and telemetry volume: Sensor snapshots and full trajectories can inflate storage; prefer sampled snapshots and links to raw blobs.

- Privacy and IP: Mask or redact proprietary details in logs when sharing with third parties; maintain access controls.

- Standards and interoperability: Adopt extensible schemas (e.g., JSON-LD contexts, OpenTelemetry attributes) so logs integrate with manufacturing execution systems (MES), computerized maintenance management systems (CMMS), and audit tools.
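The two-tier approach from the compute and latency item can be sketched with a queue and a background worker. This is a simplified, single-process illustration: the function names are assumptions, and the "rich report" is a stand-in string where a real system would call a heavier, possibly cloud-hosted, explanation service.

```python
import queue
import threading
from typing import Dict, List

pending: "queue.Queue" = queue.Queue()
reports: List[Dict] = []


def local_rationale(event: Dict) -> str:
    """Tier 1: cheap, synchronous, suitable for the robot controller."""
    return f"{event['event']}: confidence {event['confidence']:.2f}"


def handle_event(event: Dict) -> str:
    """Return the short rationale immediately and defer the rich report."""
    summary = local_rationale(event)
    pending.put(event)          # tier 2 picks this up later
    return summary


def report_worker() -> None:
    """Tier 2: stand-in for a slower, richer report generator."""
    while True:
        event = pending.get()
        if event is None:       # sentinel: shut down the worker
            break
        reports.append({
            "task_id": event["task_id"],
            "report": f"After-action review of {event['event']}",
        })


worker = threading.Thread(target=report_worker, daemon=True)
worker.start()

summary = handle_event(
    {"task_id": "pick-f0123", "event": "pick_attempt", "confidence": 0.38}
)
print(summary)                  # pick_attempt: confidence 0.38

pending.put(None)               # stop the worker once the queue drains
worker.join()
print(len(reports))             # 1
```

Because the caller never waits on the worker, the latency of rich report generation does not affect the control loop.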

From logs to continuous improvement

Explainable Action Logs should feed analytics and learning pipelines. Aggregated rationales reveal failure modes: persistent low-confidence picks in specific bins, recurring pauses at chokepoints, or planner brittleness under lighting changes. Use this data to prioritize sensor maintenance, retrain perception models, or redesign cell layouts. Over time, these mechanisms close the loop between operations and R&D, raising uptime and safety.
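Finding persistent low-confidence picks in specific bins is a simple counting exercise once rationales are structured. The sketch below assumes each entry carries a hypothetical `bin_id` field and uses an illustrative threshold of 0.5; the sample history is made up.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple


def low_confidence_hotspots(entries: Iterable[Dict],
                            threshold: float = 0.5) -> List[Tuple[str, int]]:
    """Count low-confidence pick attempts per bin, most frequent first."""
    counts = Counter(
        e["bin_id"] for e in entries
        if e["event"] == "pick_attempt"
        and e["rationale"]["confidence"] < threshold
    )
    return counts.most_common()


history = [
    {"event": "pick_attempt", "bin_id": "B12", "rationale": {"confidence": 0.35}},
    {"event": "pick_attempt", "bin_id": "B12", "rationale": {"confidence": 0.41}},
    {"event": "pick_attempt", "bin_id": "B04", "rationale": {"confidence": 0.44}},
    {"event": "pick_attempt", "bin_id": "B04", "rationale": {"confidence": 0.93}},
    {"event": "pause", "bin_id": "B12", "rationale": {"confidence": 0.20}},
]

print(low_confidence_hotspots(history))   # [('B12', 2), ('B04', 1)]
```

A ranked list like this gives maintenance a concrete starting point, for example checking the lighting or camera coverage at the top-ranked bin first.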

Final checklist for deployment

- Define a minimal viable schema that includes summary, cause, confidence, and suggested action.

- Implement local, low-latency rationale generation and an asynchronous richer-report pipeline.

- Provide UIs tailored to operators, auditors, and developers.

- Use append-only storage with checksums or signatures for critical audit trails.

- Set retention and redaction policies that meet regulatory and business needs.
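The first checklist item can be enforced with a small validator that rejects entries missing the minimal fields. This is one possible sketch: the `validate_entry` function is hypothetical, and the requirement that confidence lie in [0, 1] is an assumption based on the example values in this article.

```python
from typing import Dict, List

# Minimal viable schema: field name -> accepted Python type(s).
REQUIRED_RATIONALE_FIELDS = {
    "summary": str,
    "cause": str,
    "confidence": (int, float),
    "suggested_action": str,
}


def validate_entry(entry: Dict) -> List[str]:
    """Return a list of problems; an empty list means the entry is valid."""
    rationale = entry.get("rationale")
    if not isinstance(rationale, dict):
        return ["missing rationale object"]
    problems = []
    for name, expected in REQUIRED_RATIONALE_FIELDS.items():
        if name not in rationale:
            problems.append(f"missing rationale.{name}")
        elif not isinstance(rationale[name], expected):
            problems.append(f"rationale.{name} has wrong type")
    confidence = rationale.get("confidence")
    # Assumed convention: confidence is a probability-like score in [0, 1].
    if isinstance(confidence, (int, float)) and not 0.0 <= confidence <= 1.0:
        problems.append("rationale.confidence outside [0, 1]")
    return problems


good = {"rationale": {
    "summary": "Chose edge grasp.",
    "cause": "Occlusion detected by camera_2.",
    "confidence": 0.38,
    "suggested_action": "Inspect camera_2.",
}}
bad = {"rationale": {"summary": "Paused.", "confidence": 1.7}}

print(validate_entry(good))   # []
print(validate_entry(bad))
```

Running a check like this at write time keeps malformed entries out of the audit trail and makes later schema iterations easier to roll out.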

Explainable Action Logs are not a finish line but a foundation: they make robot behavior legible, reduce mean time to repair, and build the human trust necessary for deeper collaboration with automation systems.

Conclusion: Embedding Explainable Action Logs into industrial robots transforms raw telemetry into actionable, human-readable rationales that accelerate maintenance, simplify audits, and enable effective human–robot teamwork. Start small with concise rationales, iterate the schema, and let logs drive continuous improvement across the plant.

Ready to make your robots intelligible? Start designing a minimal Explainable Action Log schema for your next deployment.